import zarr

import numpy as np

from matplotlib import pyplot as plt

import moraine.cli as mc

import moraine as mr

Point Cloud data utility

gix2bool

gix2bool (gix:str, is_pc:str, shape:tuple[int], chunks:tuple[int]=(1000, 1000))

Convert a point cloud grid index to a 2D boolean array.

| | Type | Default | Details |
|---|---|---|---|
| gix | str | | point cloud grid index |
| is_pc | str | | output, bool array |
| shape | tuple | | shape of one image (nlines,width) |
| chunks | tuple | (1000, 1000) | output chunk size |
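As a rough in-memory illustration of this conversion, the sketch below scatters a grid index of shape `(N, 2)` (one `(line, pixel)` pair per point, as in the usage examples on this page) into a boolean mask with plain NumPy. It is not moraine's implementation, which works on zarr paths and chunks; the helper name `gix_to_bool_sketch` is hypothetical.

```python
import numpy as np

def gix_to_bool_sketch(gix: np.ndarray, shape: tuple) -> np.ndarray:
    """Hypothetical helper: mark every (row, col) pair listed in gix as True."""
    is_pc = np.zeros(shape, dtype=bool)
    # Fancy indexing with the row column and the col column of gix.
    is_pc[gix[:, 0], gix[:, 1]] = True
    return is_pc

# Three points on a 3 x 4 image.
gix = np.array([[0, 1], [2, 0], [2, 3]], dtype=np.int32)
is_pc = gix_to_bool_sketch(gix, shape=(3, 4))
```

The number of `True` entries in the mask equals the number of points as long as the grid index holds no duplicate pairs.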

bool2gix

bool2gix (is_pc:str, gix:str, chunks:int=100000)

Convert a 2D boolean array to a grid index.

| | Type | Default | Details |
|---|---|---|---|
| is_pc | str | | input bool array |
| gix | str | | output, point cloud grid index |
| chunks | int | 100000 | output point chunk size |
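The reverse direction can be sketched in memory with `np.argwhere`, which lists the coordinates of all `True` pixels in row-major order. This is only an illustration under the assumption that the grid index is an `(N, 2)` int32 array of `(line, pixel)` pairs, as in the usage examples on this page; the ordering moraine itself produces is not shown here, and `bool_to_gix_sketch` is a hypothetical name.

```python
import numpy as np

def bool_to_gix_sketch(is_pc: np.ndarray) -> np.ndarray:
    """Hypothetical helper: list the (row, col) of every True pixel, row-major."""
    return np.argwhere(is_pc).astype(np.int32)

# Build a small mask with three selected pixels.
is_pc = np.zeros((3, 4), dtype=bool)
is_pc[[2, 0, 2], [3, 1, 0]] = True

gix = bool_to_gix_sketch(is_pc)
```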

ras2pc

ras2pc (idx:str, ras:str|list, pc:str|list, chunks:int=None, processes=False, n_workers=1, threads_per_worker=1, **dask_cluster_arg)

Convert raster data to point cloud data

| | Type | Default | Details |
|---|---|---|---|
| idx | str | | point cloud grid index or Hilbert index |
| ras | str \| list | | path (as a string) or list of paths to raster data |
| pc | str \| list | | output, path (as a string) or list of paths for point cloud data |
| chunks | int | None | output point chunk size, same as gix by default |
| processes | bool | False | use processes (True) or threads (False) for dask workers |
| n_workers | int | 1 | number of dask workers |
| threads_per_worker | int | 1 | number of threads per dask worker |
| dask_cluster_arg | VAR_KEYWORD | | |

Usage:

logger = mc.get_logger()

ras_data1 = np.random.rand(100,100).astype(np.float32)

ras_data2 = np.random.rand(100,100,3).astype(np.float32)+1j*np.random.rand(100,100,3).astype(np.float32)

gix = np.random.choice(np.arange(100*100,dtype=np.int32),size=1000,replace=False)

gix.sort()

gix = np.stack(np.unravel_index(gix,shape=(100,100)),axis=-1).astype(np.int32)

pc_data1 = ras_data1[gix[:,0],gix[:,1]]

pc_data2 = ras_data2[gix[:,0],gix[:,1]]

gix_zarr = zarr.open('pc/gix.zarr',mode='w',shape=gix.shape,dtype=gix.dtype,chunks=(200,1))

ras_zarr1 = zarr.open('pc/ras1.zarr',mode='w',shape=ras_data1.shape,dtype=ras_data1.dtype,chunks=(20,100))

ras_zarr2 = zarr.open('pc/ras2.zarr',mode='w',shape=ras_data2.shape,dtype=ras_data2.dtype,chunks=(20,100,1))

gix_zarr[:] = gix

ras_zarr1[:] = ras_data1

ras_zarr2[:] = ras_data2

ras2pc('pc/gix.zarr','pc/ras1.zarr','pc/pc1.zarr')

pc_zarr1 = zarr.open('pc/pc1.zarr',mode='r')

np.testing.assert_array_equal(pc_data1,pc_zarr1[:])

ras2pc('pc/gix.zarr',ras=['pc/ras1.zarr','pc/ras2.zarr'],pc=['pc/pc1.zarr','pc/pc2.zarr'])

pc_zarr1 = zarr.open('pc/pc1.zarr',mode='r')

pc_zarr2 = zarr.open('pc/pc2.zarr',mode='r')

np.testing.assert_array_equal(pc_data1,pc_zarr1[:])

np.testing.assert_array_equal(pc_data2,pc_zarr2[:])

2025-09-18 12:28:18 - log_args - INFO - running function: ras2pc

2025-09-18 12:28:18 - log_args - INFO - fetching args:

2025-09-18 12:28:18 - log_args - INFO - idx = 'pc/gix.zarr'

2025-09-18 12:28:18 - log_args - INFO - ras = 'pc/ras1.zarr'

2025-09-18 12:28:18 - log_args - INFO - pc = 'pc/pc1.zarr'

2025-09-18 12:28:18 - log_args - INFO - chunks = None

2025-09-18 12:28:18 - log_args - INFO - processes = False

2025-09-18 12:28:18 - log_args - INFO - n_workers = 1

2025-09-18 12:28:18 - log_args - INFO - threads_per_worker = 1

2025-09-18 12:28:18 - log_args - INFO - dask_cluster_arg = {}

2025-09-18 12:28:18 - log_args - INFO - fetching args done.

2025-09-18 12:28:18 - zarr_info - INFO - pc/gix.zarr zarray shape, chunks, dtype: (1000, 2), (200, 1), int32

2025-09-18 12:28:18 - ras2pc - INFO - loading gix into memory.

2025-09-18 12:28:18 - ras2pc - INFO - starting dask local cluster.

2025-09-18 12:28:20 - ras2pc - INFO - dask local cluster started.

2025-09-18 12:28:20 - dask_cluster_info - INFO - dask cluster: LocalCluster(dashboard_link='http://10.211.48.4:8787/status', workers=1, threads=1, memory=256.00 GiB)

2025-09-18 12:28:20 - ras2pc - INFO - start to slice on pc/ras1.zarr

2025-09-18 12:28:20 - zarr_info - INFO - pc/ras1.zarr zarray shape, chunks, dtype: (100, 100), (20, 100), float32

2025-09-18 12:28:20 - darr_info - INFO - ras dask array shape, chunksize, dtype: (100, 100), (100, 100), float32

2025-09-18 12:28:20 - darr_info - INFO - pc dask array shape, chunksize, dtype: (1000,), (1000,), float32

2025-09-18 12:28:20 - ras2pc - INFO - saving to pc/pc1.zarr.

2025-09-18 12:28:20 - zarr_info - INFO - pc/pc1.zarr zarray shape, chunks, dtype: (1000,), (200,), float32

2025-09-18 12:28:20 - ras2pc - INFO - computing graph setted. doing all the computing.

2025-09-18 12:28:21 - ras2pc - INFO - computing finished.

2025-09-18 12:28:21 - ras2pc - INFO - dask cluster closed.

2025-09-18 12:28:21 - log_args - INFO - running function: ras2pc

2025-09-18 12:28:21 - log_args - INFO - fetching args:

2025-09-18 12:28:21 - log_args - INFO - idx = 'pc/gix.zarr'

2025-09-18 12:28:21 - log_args - INFO - ras = ['pc/ras1.zarr', 'pc/ras2.zarr']

2025-09-18 12:28:21 - log_args - INFO - pc = ['pc/pc1.zarr', 'pc/pc2.zarr']

2025-09-18 12:28:21 - log_args - INFO - chunks = None

2025-09-18 12:28:21 - log_args - INFO - processes = False

2025-09-18 12:28:21 - log_args - INFO - n_workers = 1

2025-09-18 12:28:21 - log_args - INFO - threads_per_worker = 1

2025-09-18 12:28:21 - log_args - INFO - dask_cluster_arg = {}

2025-09-18 12:28:21 - log_args - INFO - fetching args done.

2025-09-18 12:28:21 - zarr_info - INFO - pc/gix.zarr zarray shape, chunks, dtype: (1000, 2), (200, 1), int32

2025-09-18 12:28:21 - ras2pc - INFO - loading gix into memory.

2025-09-18 12:28:21 - ras2pc - INFO - starting dask local cluster.

2025-09-18 12:28:21 - ras2pc - INFO - dask local cluster started.

2025-09-18 12:28:21 - dask_cluster_info - INFO - dask cluster: LocalCluster(dashboard_link='http://10.211.48.4:8787/status', workers=1, threads=1, memory=256.00 GiB)

2025-09-18 12:28:21 - ras2pc - INFO - start to slice on pc/ras1.zarr

2025-09-18 12:28:21 - zarr_info - INFO - pc/ras1.zarr zarray shape, chunks, dtype: (100, 100), (20, 100), float32

2025-09-18 12:28:21 - darr_info - INFO - ras dask array shape, chunksize, dtype: (100, 100), (100, 100), float32

2025-09-18 12:28:21 - darr_info - INFO - pc dask array shape, chunksize, dtype: (1000,), (1000,), float32

2025-09-18 12:28:21 - ras2pc - INFO - saving to pc/pc1.zarr.

2025-09-18 12:28:21 - zarr_info - INFO - pc/pc1.zarr zarray shape, chunks, dtype: (1000,), (200,), float32

2025-09-18 12:28:21 - ras2pc - INFO - start to slice on pc/ras2.zarr

2025-09-18 12:28:21 - zarr_info - INFO - pc/ras2.zarr zarray shape, chunks, dtype: (100, 100, 3), (20, 100, 1), complex64

2025-09-18 12:28:21 - darr_info - INFO - ras dask array shape, chunksize, dtype: (100, 100, 3), (100, 100, 1), complex64

2025-09-18 12:28:21 - darr_info - INFO - pc dask array shape, chunksize, dtype: (1000, 3), (1000, 1), complex64

2025-09-18 12:28:21 - ras2pc - INFO - saving to pc/pc2.zarr.

2025-09-18 12:28:21 - zarr_info - INFO - pc/pc2.zarr zarray shape, chunks, dtype: (1000, 3), (200, 1), complex64

2025-09-18 12:28:21 - ras2pc - INFO - computing graph setted. doing all the computing.

2025-09-18 12:28:21 - ras2pc - INFO - computing finished.

2025-09-18 12:28:21 - ras2pc - INFO - dask cluster closed.

pc_concat

pc_concat (pcs:list|str, pc:list|str, key:list|str=None, chunks:int=None, processes=False, n_workers=1, threads_per_worker=1, **dask_cluster_arg)

Concatenate (and optionally sort) point cloud datasets.

| | Type | Default | Details |
|---|---|---|---|
| pcs | list \| str | | list of paths to pc, or a directory that holds one pc, or a list of those |
| pc | list \| str | | output, path of the output or a list of those |
| key | list \| str | None | keys that sort the pc data, no sort by default |
| chunks | int | None | pc chunk size in output data, optional, same as first pc in pcs by default |
| processes | bool | False | use processes (True) or threads (False) for dask workers |
| n_workers | int | 1 | number of dask workers |
| threads_per_worker | int | 1 | number of threads per dask worker |
| dask_cluster_arg | VAR_KEYWORD | | |

pc_data = np.random.rand(1000,3).astype(np.float32)+1j*np.random.rand(1000,3).astype(np.float32)

pc1_zarr = zarr.open('pc/pc1.zarr',mode='w',shape=(300,3),dtype=pc_data.dtype,chunks=(300,1))

pc2_zarr = zarr.open('pc/pc2.zarr',mode='w',shape=(700,3),dtype=pc_data.dtype,chunks=(700,1))

pc1_zarr[:] = pc_data[:300]

pc2_zarr[:] = pc_data[300:]

pc_concat(['pc/pc1.zarr','pc/pc2.zarr'],'pc/pc.zarr',chunks=500)

np.testing.assert_array_equal(zarr.open('pc/pc.zarr',mode='r')[:],pc_data)

2025-09-18 12:28:21 - log_args - INFO - running function: pc_concat

2025-09-18 12:28:21 - log_args - INFO - fetching args:

2025-09-18 12:28:21 - log_args - INFO - pcs = ['pc/pc1.zarr', 'pc/pc2.zarr']

2025-09-18 12:28:21 - log_args - INFO - pc = 'pc/pc.zarr'

2025-09-18 12:28:21 - log_args - INFO - key = None

2025-09-18 12:28:21 - log_args - INFO - chunks = 500

2025-09-18 12:28:21 - log_args - INFO - processes = False

2025-09-18 12:28:21 - log_args - INFO - n_workers = 1

2025-09-18 12:28:21 - log_args - INFO - threads_per_worker = 1

2025-09-18 12:28:21 - log_args - INFO - dask_cluster_arg = {}

2025-09-18 12:28:21 - log_args - INFO - fetching args done.

2025-09-18 12:28:21 - pc_concat - INFO - input pcs: [['pc/pc1.zarr', 'pc/pc2.zarr']]

2025-09-18 12:28:21 - pc_concat - INFO - output pc: ['pc/pc.zarr']

2025-09-18 12:28:21 - pc_concat - INFO - starting dask local cluster.

2025-09-18 12:28:21 - pc_concat - INFO - dask local cluster started.

2025-09-18 12:28:21 - dask_cluster_info - INFO - dask cluster: LocalCluster(dashboard_link='http://10.211.48.4:8787/status', workers=1, threads=1, memory=256.00 GiB)

2025-09-18 12:28:21 - pc_concat - INFO - read pc from ['pc/pc1.zarr', 'pc/pc2.zarr']

2025-09-18 12:28:21 - darr_info - INFO - concatenated pc dask array shape, chunksize, dtype: (1000, 3), (1000, 1), complex64

2025-09-18 12:28:21 - pc_concat - INFO - save pc to pc/pc.zarr

2025-09-18 12:28:21 - zarr_info - INFO - pc/pc.zarr zarray shape, chunks, dtype: (1000, 3), (500, 1), complex64

2025-09-18 12:28:21 - pc_concat - INFO - computing graph setted. doing all the computing.

2025-09-18 12:28:21 - pc_concat - INFO - computing finished.

2025-09-18 12:28:21 - pc_concat - INFO - dask cluster closed.

ras2pc_ras_chunk

ras2pc_ras_chunk (gix:str, ras:str|list, pc:str|list, key:str, chunks:tuple=None, processes=False, n_workers=1, threads_per_worker=1, **dask_cluster_arg)

Convert raster data to point cloud data sorted by raster chunk.

| | Type | Default | Details |
|---|---|---|---|
| gix | str | | point cloud grid index |
| ras | str \| list | | path (as a string) or list of paths to raster data |
| pc | str \| list | | output, path (directory) or list of paths for point cloud data |
| key | str | | output, path for the key that sorts the generated pc in the directory back to gix order |
| chunks | tuple | None | ras chunks, same as the first ras by default |
| processes | bool | False | use processes (True) or threads (False) for dask workers |
| n_workers | int | 1 | number of dask workers |
| threads_per_worker | int | 1 | number of threads per dask worker |
| dask_cluster_arg | VAR_KEYWORD | | |
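To make the idea of a raster-chunk ordering and a key that restores gix order more concrete, here is a minimal NumPy sketch. It assumes the key acts like an inverse permutation; how moraine actually encodes and applies the key is not shown on this page, and `ras_chunk_order_sketch` is a hypothetical name.

```python
import numpy as np

def ras_chunk_order_sketch(gix: np.ndarray, chunks: tuple) -> np.ndarray:
    """Hypothetical helper: permutation that groups points by raster chunk."""
    chunk_ij = gix // np.asarray(chunks)  # chunk row / chunk col of each point
    # np.lexsort treats the LAST key as primary: chunk row first, then chunk col.
    # The sort is stable, so points inside one chunk keep their gix order.
    return np.lexsort((chunk_ij[:, 1], chunk_ij[:, 0]))

rng = np.random.default_rng(0)
flat = np.sort(rng.choice(100 * 100, size=1000, replace=False))
gix = np.stack(np.unravel_index(flat, (100, 100)), axis=-1)
pc = rng.random(1000).astype(np.float32)  # point data in gix order

order = ras_chunk_order_sketch(gix, chunks=(20, 20))
pc_chunked = pc[order]            # data laid out raster chunk by raster chunk

restore = np.argsort(order)       # inverse permutation (assumed role of the key)
pc_restored = pc_chunked[restore] # back in gix order
```

Grouping points by raster chunk means each chunk of the raster only has to be read once, at the cost of a later reordering step such as the `pc_concat(..., key=...)` calls in the usage below.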

ras2pc_ras_chunk('pc/gix.zarr','pc/ras1.zarr','pc/pc1',key='pc/key.zarr')

pc_concat('pc/pc1','pc/pc1.zarr',key='pc/key.zarr',chunks=200)

pc_zarr1 = zarr.open('pc/pc1.zarr',mode='r')

np.testing.assert_array_equal(pc_data1,pc_zarr1[:])

ras2pc_ras_chunk('pc/gix.zarr',ras=['pc/ras1.zarr','pc/ras2.zarr'],pc=['pc/pc1','pc/pc2'],key='pc/key.zarr')

pc_concat('pc/pc1','pc/pc1.zarr',key='pc/key.zarr',chunks=200)

pc_concat('pc/pc2','pc/pc2.zarr',key='pc/key.zarr',chunks=200)

pc_zarr1 = zarr.open('pc/pc1.zarr',mode='r')

pc_zarr2 = zarr.open('pc/pc2.zarr',mode='r')

np.testing.assert_array_equal(pc_data1,pc_zarr1[:])

np.testing.assert_array_equal(pc_data2,pc_zarr2[:])

2025-09-18 12:28:21 - log_args - INFO - running function: ras2pc_ras_chunk

2025-09-18 12:28:21 - log_args - INFO - fetching args:

2025-09-18 12:28:21 - log_args - INFO - gix = 'pc/gix.zarr'

2025-09-18 12:28:21 - log_args - INFO - ras = 'pc/ras1.zarr'

2025-09-18 12:28:21 - log_args - INFO - pc = 'pc/pc1'

2025-09-18 12:28:21 - log_args - INFO - key = 'pc/key.zarr'

2025-09-18 12:28:21 - log_args - INFO - chunks = None

2025-09-18 12:28:21 - log_args - INFO - processes = False

2025-09-18 12:28:21 - log_args - INFO - n_workers = 1

2025-09-18 12:28:21 - log_args - INFO - threads_per_worker = 1

2025-09-18 12:28:21 - log_args - INFO - dask_cluster_arg = {}

2025-09-18 12:28:21 - log_args - INFO - fetching args done.

2025-09-18 12:28:21 - zarr_info - INFO - pc/gix.zarr zarray shape, chunks, dtype: (1000, 2), (200, 1), int32

2025-09-18 12:28:21 - ras2pc_ras_chunk - INFO - loading gix into memory.

2025-09-18 12:28:21 - ras2pc_ras_chunk - INFO - convert gix to the order of ras chunk

2025-09-18 12:28:28 - ras2pc_ras_chunk - INFO - save key

2025-09-18 12:28:28 - ras2pc_ras_chunk - INFO - starting dask local cluster.

2025-09-18 12:28:29 - ras2pc_ras_chunk - INFO - dask local cluster started.

2025-09-18 12:28:29 - dask_cluster_info - INFO - dask cluster: LocalCluster(dashboard_link='http://10.211.48.4:8787/status', workers=1, threads=1, memory=256.00 GiB)

2025-09-18 12:28:29 - ras2pc_ras_chunk - INFO - start to slice on pc/ras1.zarr

2025-09-18 12:28:29 - zarr_info - INFO - pc/ras1.zarr zarray shape, chunks, dtype: (100, 100), (20, 20), float32

2025-09-18 12:28:29 - darr_info - INFO - ras dask array shape, chunksize, dtype: (100, 100), (20, 20), float32

2025-09-18 12:28:29 - darr_info - INFO - pc dask array shape, chunksize, dtype: (1000,), (49,), float32

2025-09-18 12:28:29 - ras2pc_ras_chunk - INFO - saving to pc/pc1.

2025-09-18 12:28:29 - ras2pc_ras_chunk - INFO - computing graph setted. doing all the computing.

2025-09-18 12:28:29 - ras2pc_ras_chunk - INFO - computing finished.

2025-09-18 12:28:29 - ras2pc_ras_chunk - INFO - dask cluster closed.

2025-09-18 12:28:29 - log_args - INFO - running function: pc_concat

2025-09-18 12:28:29 - log_args - INFO - fetching args:

2025-09-18 12:28:29 - log_args - INFO - pcs = 'pc/pc1'

2025-09-18 12:28:29 - log_args - INFO - pc = 'pc/pc1.zarr'

2025-09-18 12:28:29 - log_args - INFO - key = 'pc/key.zarr'

2025-09-18 12:28:29 - log_args - INFO - chunks = 200

2025-09-18 12:28:29 - log_args - INFO - processes = False

2025-09-18 12:28:29 - log_args - INFO - n_workers = 1

2025-09-18 12:28:29 - log_args - INFO - threads_per_worker = 1

2025-09-18 12:28:29 - log_args - INFO - dask_cluster_arg = {}

2025-09-18 12:28:29 - log_args - INFO - fetching args done.

2025-09-18 12:28:29 - pc_concat - INFO - input pcs: [[Path('pc/pc1/0.zarr'), Path('pc/pc1/1.zarr'), Path('pc/pc1/2.zarr'), Path('pc/pc1/3.zarr'), Path('pc/pc1/4.zarr'), Path('pc/pc1/5.zarr'), Path('pc/pc1/6.zarr'), Path('pc/pc1/7.zarr'), Path('pc/pc1/8.zarr'), Path('pc/pc1/9.zarr'), Path('pc/pc1/10.zarr'), Path('pc/pc1/11.zarr'), Path('pc/pc1/12.zarr'), Path('pc/pc1/13.zarr'), Path('pc/pc1/14.zarr'), Path('pc/pc1/15.zarr'), Path('pc/pc1/16.zarr'), Path('pc/pc1/17.zarr'), Path('pc/pc1/18.zarr'), Path('pc/pc1/19.zarr'), Path('pc/pc1/20.zarr'), Path('pc/pc1/21.zarr'), Path('pc/pc1/22.zarr'), Path('pc/pc1/23.zarr'), Path('pc/pc1/24.zarr')]]

2025-09-18 12:28:29 - pc_concat - INFO - output pc: ['pc/pc1.zarr']

2025-09-18 12:28:29 - pc_concat - INFO - load key

2025-09-18 12:28:29 - zarr_info - INFO - pc/key.zarr zarray shape, chunks, dtype: (1000,), (200,), int64

2025-09-18 12:28:30 - pc_concat - INFO - starting dask local cluster.

2025-09-18 12:28:30 - pc_concat - INFO - dask local cluster started.

2025-09-18 12:28:30 - dask_cluster_info - INFO - dask cluster: LocalCluster(dashboard_link='http://10.211.48.4:8787/status', workers=1, threads=1, memory=256.00 GiB)

2025-09-18 12:28:30 - pc_concat - INFO - read pc from [Path('pc/pc1/0.zarr'), Path('pc/pc1/1.zarr'), Path('pc/pc1/2.zarr'), Path('pc/pc1/3.zarr'), Path('pc/pc1/4.zarr'), Path('pc/pc1/5.zarr'), Path('pc/pc1/6.zarr'), Path('pc/pc1/7.zarr'), Path('pc/pc1/8.zarr'), Path('pc/pc1/9.zarr'), Path('pc/pc1/10.zarr'), Path('pc/pc1/11.zarr'), Path('pc/pc1/12.zarr'), Path('pc/pc1/13.zarr'), Path('pc/pc1/14.zarr'), Path('pc/pc1/15.zarr'), Path('pc/pc1/16.zarr'), Path('pc/pc1/17.zarr'), Path('pc/pc1/18.zarr'), Path('pc/pc1/19.zarr'), Path('pc/pc1/20.zarr'), Path('pc/pc1/21.zarr'), Path('pc/pc1/22.zarr'), Path('pc/pc1/23.zarr'), Path('pc/pc1/24.zarr')]

2025-09-18 12:28:30 - darr_info - INFO - concatenated pc dask array shape, chunksize, dtype: (1000,), (1000,), float32

2025-09-18 12:28:30 - pc_concat - INFO - sort pc according to key

2025-09-18 12:28:30 - darr_info - INFO - sorted pc dask array shape, chunksize, dtype: (1000,), (1000,), float32

2025-09-18 12:28:30 - pc_concat - INFO - save pc to pc/pc1.zarr

2025-09-18 12:28:30 - zarr_info - INFO - pc/pc1.zarr zarray shape, chunks, dtype: (1000,), (200,), float32

2025-09-18 12:28:30 - pc_concat - INFO - computing graph setted. doing all the computing.

2025-09-18 12:28:30 - pc_concat - INFO - computing finished.

2025-09-18 12:28:30 - pc_concat - INFO - dask cluster closed.

2025-09-18 12:28:30 - log_args - INFO - running function: ras2pc_ras_chunk

2025-09-18 12:28:30 - log_args - INFO - fetching args:

2025-09-18 12:28:30 - log_args - INFO - gix = 'pc/gix.zarr'

2025-09-18 12:28:30 - log_args - INFO - ras = ['pc/ras1.zarr', 'pc/ras2.zarr']

2025-09-18 12:28:30 - log_args - INFO - pc = ['pc/pc1', 'pc/pc2']

2025-09-18 12:28:30 - log_args - INFO - key = 'pc/key.zarr'

2025-09-18 12:28:30 - log_args - INFO - chunks = None

2025-09-18 12:28:30 - log_args - INFO - processes = False

2025-09-18 12:28:30 - log_args - INFO - n_workers = 1

2025-09-18 12:28:30 - log_args - INFO - threads_per_worker = 1

2025-09-18 12:28:30 - log_args - INFO - dask_cluster_arg = {}

2025-09-18 12:28:30 - log_args - INFO - fetching args done.

2025-09-18 12:28:30 - zarr_info - INFO - pc/gix.zarr zarray shape, chunks, dtype: (1000, 2), (200, 1), int32

2025-09-18 12:28:30 - ras2pc_ras_chunk - INFO - loading gix into memory.

2025-09-18 12:28:30 - ras2pc_ras_chunk - INFO - convert gix to the order of ras chunk

2025-09-18 12:28:30 - ras2pc_ras_chunk - INFO - save key

2025-09-18 12:28:30 - ras2pc_ras_chunk - INFO - starting dask local cluster.

2025-09-18 12:28:30 - ras2pc_ras_chunk - INFO - dask local cluster started.

2025-09-18 12:28:30 - dask_cluster_info - INFO - dask cluster: LocalCluster(dashboard_link='http://10.211.48.4:8787/status', workers=1, threads=1, memory=256.00 GiB)

2025-09-18 12:28:30 - ras2pc_ras_chunk - INFO - start to slice on pc/ras1.zarr

2025-09-18 12:28:30 - zarr_info - INFO - pc/ras1.zarr zarray shape, chunks, dtype: (100, 100), (20, 20), float32

2025-09-18 12:28:30 - darr_info - INFO - ras dask array shape, chunksize, dtype: (100, 100), (20, 20), float32

2025-09-18 12:28:30 - darr_info - INFO - pc dask array shape, chunksize, dtype: (1000,), (49,), float32

2025-09-18 12:28:30 - ras2pc_ras_chunk - INFO - saving to pc/pc1.

2025-09-18 12:28:30 - ras2pc_ras_chunk - INFO - start to slice on pc/ras2.zarr

2025-09-18 12:28:30 - zarr_info - INFO - pc/ras2.zarr zarray shape, chunks, dtype: (100, 100, 3), (20, 20, 1), complex64

2025-09-18 12:28:30 - darr_info - INFO - ras dask array shape, chunksize, dtype: (100, 100, 3), (20, 20, 3), complex64

2025-09-18 12:28:30 - darr_info - INFO - pc dask array shape, chunksize, dtype: (1000, 3), (49, 3), complex64

2025-09-18 12:28:30 - ras2pc_ras_chunk - INFO - saving to pc/pc2.

2025-09-18 12:28:31 - ras2pc_ras_chunk - INFO - computing graph setted. doing all the computing.

2025-09-18 12:28:32 - ras2pc_ras_chunk - INFO - computing finished.

2025-09-18 12:28:32 - ras2pc_ras_chunk - INFO - dask cluster closed.

2025-09-18 12:28:32 - log_args - INFO - running function: pc_concat

2025-09-18 12:28:32 - log_args - INFO - fetching args:

2025-09-18 12:28:32 - log_args - INFO - pcs = 'pc/pc1'

2025-09-18 12:28:32 - log_args - INFO - pc = 'pc/pc1.zarr'

2025-09-18 12:28:32 - log_args - INFO - key = 'pc/key.zarr'

2025-09-18 12:28:32 - log_args - INFO - chunks = 200

2025-09-18 12:28:32 - log_args - INFO - processes = False

2025-09-18 12:28:32 - log_args - INFO - n_workers = 1

2025-09-18 12:28:32 - log_args - INFO - threads_per_worker = 1

2025-09-18 12:28:32 - log_args - INFO - dask_cluster_arg = {}

2025-09-18 12:28:32 - log_args - INFO - fetching args done.

2025-09-18 12:28:32 - pc_concat - INFO - input pcs: [[Path('pc/pc1/0.zarr'), Path('pc/pc1/1.zarr'), Path('pc/pc1/2.zarr'), Path('pc/pc1/3.zarr'), Path('pc/pc1/4.zarr'), Path('pc/pc1/5.zarr'), Path('pc/pc1/6.zarr'), Path('pc/pc1/7.zarr'), Path('pc/pc1/8.zarr'), Path('pc/pc1/9.zarr'), Path('pc/pc1/10.zarr'), Path('pc/pc1/11.zarr'), Path('pc/pc1/12.zarr'), Path('pc/pc1/13.zarr'), Path('pc/pc1/14.zarr'), Path('pc/pc1/15.zarr'), Path('pc/pc1/16.zarr'), Path('pc/pc1/17.zarr'), Path('pc/pc1/18.zarr'), Path('pc/pc1/19.zarr'), Path('pc/pc1/20.zarr'), Path('pc/pc1/21.zarr'), Path('pc/pc1/22.zarr'), Path('pc/pc1/23.zarr'), Path('pc/pc1/24.zarr')]]

2025-09-18 12:28:32 - pc_concat - INFO - output pc: ['pc/pc1.zarr']

2025-09-18 12:28:32 - pc_concat - INFO - load key

2025-09-18 12:28:32 - zarr_info - INFO - pc/key.zarr zarray shape, chunks, dtype: (1000,), (200,), int64

2025-09-18 12:28:32 - pc_concat - INFO - starting dask local cluster.

2025-09-18 12:28:32 - pc_concat - INFO - dask local cluster started.

2025-09-18 12:28:32 - dask_cluster_info - INFO - dask cluster: LocalCluster(dashboard_link='http://10.211.48.4:8787/status', workers=1, threads=1, memory=256.00 GiB)

2025-09-18 12:28:32 - pc_concat - INFO - read pc from [Path('pc/pc1/0.zarr'), Path('pc/pc1/1.zarr'), Path('pc/pc1/2.zarr'), Path('pc/pc1/3.zarr'), Path('pc/pc1/4.zarr'), Path('pc/pc1/5.zarr'), Path('pc/pc1/6.zarr'), Path('pc/pc1/7.zarr'), Path('pc/pc1/8.zarr'), Path('pc/pc1/9.zarr'), Path('pc/pc1/10.zarr'), Path('pc/pc1/11.zarr'), Path('pc/pc1/12.zarr'), Path('pc/pc1/13.zarr'), Path('pc/pc1/14.zarr'), Path('pc/pc1/15.zarr'), Path('pc/pc1/16.zarr'), Path('pc/pc1/17.zarr'), Path('pc/pc1/18.zarr'), Path('pc/pc1/19.zarr'), Path('pc/pc1/20.zarr'), Path('pc/pc1/21.zarr'), Path('pc/pc1/22.zarr'), Path('pc/pc1/23.zarr'), Path('pc/pc1/24.zarr')]

2025-09-18 12:28:32 - darr_info - INFO - concatenated pc dask array shape, chunksize, dtype: (1000,), (1000,), float32

2025-09-18 12:28:32 - pc_concat - INFO - sort pc according to key

2025-09-18 12:28:32 - darr_info - INFO - sorted pc dask array shape, chunksize, dtype: (1000,), (1000,), float32

2025-09-18 12:28:32 - pc_concat - INFO - save pc to pc/pc1.zarr

2025-09-18 12:28:32 - zarr_info - INFO - pc/pc1.zarr zarray shape, chunks, dtype: (1000,), (200,), float32

2025-09-18 12:28:32 - pc_concat - INFO - computing graph setted. doing all the computing.

2025-09-18 12:28:32 - pc_concat - INFO - computing finished.

2025-09-18 12:28:32 - pc_concat - INFO - dask cluster closed.

2025-09-18 12:28:32 - log_args - INFO - running function: pc_concat

2025-09-18 12:28:32 - log_args - INFO - fetching args:

2025-09-18 12:28:32 - log_args - INFO - pcs = 'pc/pc2'

2025-09-18 12:28:32 - log_args - INFO - pc = 'pc/pc2.zarr'

2025-09-18 12:28:32 - log_args - INFO - key = 'pc/key.zarr'

2025-09-18 12:28:32 - log_args - INFO - chunks = 200

2025-09-18 12:28:32 - log_args - INFO - processes = False

2025-09-18 12:28:32 - log_args - INFO - n_workers = 1

2025-09-18 12:28:32 - log_args - INFO - threads_per_worker = 1

2025-09-18 12:28:32 - log_args - INFO - dask_cluster_arg = {}

2025-09-18 12:28:32 - log_args - INFO - fetching args done.

2025-09-18 12:28:32 - pc_concat - INFO - input pcs: [[Path('pc/pc2/0.zarr'), Path('pc/pc2/1.zarr'), Path('pc/pc2/2.zarr'), Path('pc/pc2/3.zarr'), Path('pc/pc2/4.zarr'), Path('pc/pc2/5.zarr'), Path('pc/pc2/6.zarr'), Path('pc/pc2/7.zarr'), Path('pc/pc2/8.zarr'), Path('pc/pc2/9.zarr'), Path('pc/pc2/10.zarr'), Path('pc/pc2/11.zarr'), Path('pc/pc2/12.zarr'), Path('pc/pc2/13.zarr'), Path('pc/pc2/14.zarr'), Path('pc/pc2/15.zarr'), Path('pc/pc2/16.zarr'), Path('pc/pc2/17.zarr'), Path('pc/pc2/18.zarr'), Path('pc/pc2/19.zarr'), Path('pc/pc2/20.zarr'), Path('pc/pc2/21.zarr'), Path('pc/pc2/22.zarr'), Path('pc/pc2/23.zarr'), Path('pc/pc2/24.zarr')]]

2025-09-18 12:28:32 - pc_concat - INFO - output pc: ['pc/pc2.zarr']

2025-09-18 12:28:32 - pc_concat - INFO - load key

2025-09-18 12:28:32 - zarr_info - INFO - pc/key.zarr zarray shape, chunks, dtype: (1000,), (200,), int64

2025-09-18 12:28:32 - pc_concat - INFO - starting dask local cluster.

2025-09-18 12:28:32 - pc_concat - INFO - dask local cluster started.

2025-09-18 12:28:32 - dask_cluster_info - INFO - dask cluster: LocalCluster(dashboard_link='http://10.211.48.4:8787/status', workers=1, threads=1, memory=256.00 GiB)

2025-09-18 12:28:32 - pc_concat - INFO - read pc from [Path('pc/pc2/0.zarr'), Path('pc/pc2/1.zarr'), Path('pc/pc2/2.zarr'), Path('pc/pc2/3.zarr'), Path('pc/pc2/4.zarr'), Path('pc/pc2/5.zarr'), Path('pc/pc2/6.zarr'), Path('pc/pc2/7.zarr'), Path('pc/pc2/8.zarr'), Path('pc/pc2/9.zarr'), Path('pc/pc2/10.zarr'), Path('pc/pc2/11.zarr'), Path('pc/pc2/12.zarr'), Path('pc/pc2/13.zarr'), Path('pc/pc2/14.zarr'), Path('pc/pc2/15.zarr'), Path('pc/pc2/16.zarr'), Path('pc/pc2/17.zarr'), Path('pc/pc2/18.zarr'), Path('pc/pc2/19.zarr'), Path('pc/pc2/20.zarr'), Path('pc/pc2/21.zarr'), Path('pc/pc2/22.zarr'), Path('pc/pc2/23.zarr'), Path('pc/pc2/24.zarr')]

2025-09-18 12:28:32 - darr_info - INFO - concatenated pc dask array shape, chunksize, dtype: (1000, 3), (1000, 1), complex64

2025-09-18 12:28:32 - pc_concat - INFO - sort pc according to key

2025-09-18 12:28:32 - darr_info - INFO - sorted pc dask array shape, chunksize, dtype: (1000, 3), (1000, 1), complex64

2025-09-18 12:28:32 - pc_concat - INFO - save pc to pc/pc2.zarr

2025-09-18 12:28:32 - zarr_info - INFO - pc/pc2.zarr zarray shape, chunks, dtype: (1000, 3), (200, 1), complex64

2025-09-18 12:28:32 - pc_concat - INFO - computing graph setted. doing all the computing.

2025-09-18 12:28:33 - pc_concat - INFO - computing finished.

2025-09-18 12:28:33 - pc_concat - INFO - dask cluster closed.

pc2ras

pc2ras (idx:str, pc:str|list, ras:str|list, shape:tuple[int], chunks:tuple[int]=(1000, 1000), processes=False, n_workers=1, threads_per_worker=1, **dask_cluster_arg)

Convert point cloud data to raster data; pixels without a point are filled with NaN.

| | Type | Default | Details |
|---|---|---|---|
| idx | str | | point cloud grid index or Hilbert index |
| pc | str \| list | | path (as a string) or list of paths to point cloud data |
| ras | str \| list | | output, path (as a string) or list of paths for raster data |
| shape | tuple | | shape of one image (nlines,width) |
| chunks | tuple | (1000, 1000) | output chunk size |
| processes | bool | False | use processes (True) or threads (False) for dask workers |
| n_workers | int | 1 | number of dask workers |
| threads_per_worker | int | 1 | number of threads per dask worker |
| dask_cluster_arg | VAR_KEYWORD | | |

Usage:

pc_data1 = np.random.rand(1000).astype(np.float32)

pc_data2 = np.random.rand(1000,3).astype(np.float32)+1j*np.random.rand(1000,3).astype(np.float32)

gix = np.random.choice(np.arange(100*100,dtype=np.int32),size=1000,replace=False)

gix.sort()

gix = np.stack(np.unravel_index(gix,shape=(100,100)),axis=-1).astype(np.int32)

ras_data1 = np.zeros((100,100),dtype=np.float32)

ras_data2 = np.zeros((100,100,3),dtype=np.complex64)

ras_data1[:] = np.nan

ras_data2[:] = np.nan

ras_data1[gix[:,0],gix[:,1]] = pc_data1

ras_data2[gix[:,0],gix[:,1]] = pc_data2

gix_zarr = zarr.open('pc/gix.zarr',mode='w',shape=gix.shape,dtype=gix.dtype,chunks=(200,1))

pc_zarr1 = zarr.open('pc/pc1.zarr',mode='w',shape=pc_data1.shape,dtype=pc_data1.dtype,chunks=(200,))

pc_zarr2 = zarr.open('pc/pc2.zarr',mode='w',shape=pc_data2.shape,dtype=pc_data2.dtype,chunks=(200,1))

gix_zarr[:] = gix

pc_zarr1[:] = pc_data1

pc_zarr2[:] = pc_data2

pc2ras('pc/gix.zarr','pc/pc1.zarr','pc/ras1.zarr',shape=(100,100),chunks=(20,100))

ras_zarr1 = zarr.open('pc/ras1.zarr',mode='r')

np.testing.assert_array_equal(ras_data1,ras_zarr1[:])

pc2ras('pc/gix.zarr',['pc/pc1.zarr','pc/pc2.zarr'],['pc/ras1.zarr','pc/ras2.zarr'],shape=(100,100),chunks=(20,100))

ras_zarr1 = zarr.open('pc/ras1.zarr',mode='r')

ras_zarr2 = zarr.open('pc/ras2.zarr',mode='r')

np.testing.assert_array_equal(ras_data1,ras_zarr1[:])

np.testing.assert_array_equal(ras_data2,ras_zarr2[:])

2025-09-18 12:28:34 - log_args - INFO - running function: pc2ras

2025-09-18 12:28:34 - log_args - INFO - fetching args:

2025-09-18 12:28:34 - log_args - INFO - idx = 'pc/gix.zarr'

2025-09-18 12:28:34 - log_args - INFO - pc = 'pc/pc1.zarr'

2025-09-18 12:28:34 - log_args - INFO - ras = 'pc/ras1.zarr'

2025-09-18 12:28:34 - log_args - INFO - shape = (100, 100)

2025-09-18 12:28:34 - log_args - INFO - chunks = (20, 100)

2025-09-18 12:28:34 - log_args - INFO - processes = False

2025-09-18 12:28:34 - log_args - INFO - n_workers = 1

2025-09-18 12:28:34 - log_args - INFO - threads_per_worker = 1

2025-09-18 12:28:34 - log_args - INFO - dask_cluster_arg = {}

2025-09-18 12:28:34 - log_args - INFO - fetching args done.

2025-09-18 12:28:34 - zarr_info - INFO - pc/gix.zarr zarray shape, chunks, dtype: (1000, 2), (200, 1), int32

2025-09-18 12:28:34 - pc2ras - INFO - loading gix into memory.

2025-09-18 12:28:34 - pc2ras - INFO - starting dask local cluster.

2025-09-18 12:28:34 - pc2ras - INFO - dask local cluster started.

2025-09-18 12:28:34 - dask_cluster_info - INFO - dask cluster: LocalCluster(dashboard_link='http://10.211.48.4:8787/status', workers=1, threads=1, memory=256.00 GiB)

2025-09-18 12:28:34 - pc2ras - INFO - start to work on pc/pc1.zarr

2025-09-18 12:28:34 - zarr_info - INFO - pc/pc1.zarr zarray shape, chunks, dtype: (1000,), (200,), float32

2025-09-18 12:28:34 - darr_info - INFO - pc dask array shape, chunksize, dtype: (1000,), (1000,), float32

2025-09-18 12:28:34 - pc2ras - INFO - create ras dask array

2025-09-18 12:28:34 - darr_info - INFO - ras dask array shape, chunksize, dtype: (100, 100), (100, 100), float32

2025-09-18 12:28:34 - pc2ras - INFO - save ras to pc/ras1.zarr

2025-09-18 12:28:34 - zarr_info - INFO - pc/ras1.zarr zarray shape, chunks, dtype: (100, 100), (20, 100), float32

2025-09-18 12:28:34 - pc2ras - INFO - computing graph setted. doing all the computing.

2025-09-18 12:28:34 - pc2ras - INFO - computing finished.

2025-09-18 12:28:34 - pc2ras - INFO - dask cluster closed.

2025-09-18 12:28:34 - log_args - INFO - running function: pc2ras

2025-09-18 12:28:34 - log_args - INFO - fetching args:

2025-09-18 12:28:34 - log_args - INFO - idx = 'pc/gix.zarr'

2025-09-18 12:28:34 - log_args - INFO - pc = ['pc/pc1.zarr', 'pc/pc2.zarr']

2025-09-18 12:28:34 - log_args - INFO - ras = ['pc/ras1.zarr', 'pc/ras2.zarr']

2025-09-18 12:28:34 - log_args - INFO - shape = (100, 100)

2025-09-18 12:28:34 - log_args - INFO - chunks = (20, 100)

2025-09-18 12:28:34 - log_args - INFO - processes = False

2025-09-18 12:28:34 - log_args - INFO - n_workers = 1

2025-09-18 12:28:34 - log_args - INFO - threads_per_worker = 1

2025-09-18 12:28:34 - log_args - INFO - dask_cluster_arg = {}

2025-09-18 12:28:34 - log_args - INFO - fetching args done.

2025-09-18 12:28:34 - zarr_info - INFO - pc/gix.zarr zarray shape, chunks, dtype: (1000, 2), (200, 1), int32

2025-09-18 12:28:34 - pc2ras - INFO - loading gix into memory.

2025-09-18 12:28:34 - pc2ras - INFO - starting dask local cluster.

2025-09-18 12:28:34 - pc2ras - INFO - dask local cluster started.

2025-09-18 12:28:34 - dask_cluster_info - INFO - dask cluster: LocalCluster(dashboard_link='http://10.211.48.4:8787/status', workers=1, threads=1, memory=256.00 GiB)

2025-09-18 12:28:34 - pc2ras - INFO - start to work on pc/pc1.zarr

2025-09-18 12:28:34 - zarr_info - INFO - pc/pc1.zarr zarray shape, chunks, dtype: (1000,), (200,), float32

2025-09-18 12:28:34 - darr_info - INFO - pc dask array shape, chunksize, dtype: (1000,), (1000,), float32

2025-09-18 12:28:34 - pc2ras - INFO - create ras dask array

2025-09-18 12:28:34 - darr_info - INFO - ras dask array shape, chunksize, dtype: (100, 100), (100, 100), float32

2025-09-18 12:28:34 - pc2ras - INFO - save ras to pc/ras1.zarr

2025-09-18 12:28:34 - zarr_info - INFO - pc/ras1.zarr zarray shape, chunks, dtype: (100, 100), (20, 100), float32

2025-09-18 12:28:34 - pc2ras - INFO - start to work on pc/pc2.zarr

2025-09-18 12:28:34 - zarr_info - INFO - pc/pc2.zarr zarray shape, chunks, dtype: (1000, 3), (200, 1), complex64

2025-09-18 12:28:34 - darr_info - INFO - pc dask array shape, chunksize, dtype: (1000, 3), (1000, 1), complex64

2025-09-18 12:28:34 - pc2ras - INFO - create ras dask array

2025-09-18 12:28:34 - darr_info - INFO - ras dask array shape, chunksize, dtype: (100, 100, 3), (100, 100, 1), complex64

2025-09-18 12:28:34 - pc2ras - INFO - save ras to pc/ras2.zarr

2025-09-18 12:28:34 - zarr_info - INFO - pc/ras2.zarr zarray shape, chunks, dtype: (100, 100, 3), (20, 100, 1), complex64

2025-09-18 12:28:34 - pc2ras - INFO - computing graph setted. doing all the computing.

2025-09-18 12:28:34 - pc2ras - INFO - computing finished.

2025-09-18 12:28:34 - pc2ras - INFO - dask cluster closed.

pc_hix

pc_hix (gix:str, hix:str, shape:tuple)

Compute the Hilbert index from the grid index for point cloud data.

| | Type | Details |
|---|---|---|
| gix | str | grid index |
| hix | str | output, path |
| shape | tuple | (nlines, width) |
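
For readers unfamiliar with Hilbert indices, here is a minimal pure-Python sketch of the textbook mapping from a 2D grid position to its distance along a Hilbert curve. It is an illustration only: the helper name `hilbert_index` and its `order` parameter are hypothetical, and pc_hix may use a different orientation, padding, or implementation.

```python
def hilbert_index(x: int, y: int, order: int) -> int:
    """Distance along a Hilbert curve covering a (2**order x 2**order) grid.

    Hypothetical helper for illustration; not part of moraine.
    """
    n = 1 << order
    d = 0
    s = n >> 1
    while s > 0:
        rx = 1 if (x & s) else 0
        ry = 1 if (y & s) else 0
        d += s * s * ((3 * rx) ^ ry)
        # Rotate/flip the quadrant so the sub-curve has the standard orientation.
        if ry == 0:
            if rx == 1:
                x = n - 1 - x
                y = n - 1 - y
            x, y = y, x
        s >>= 1
    return d


# Index every cell of an 8x8 grid (order 3).
order = 3
side = 1 << order
cells = [(x, y) for x in range(side) for y in range(side)]
index = {cell: hilbert_index(cell[0], cell[1], order) for cell in cells}
```

Consecutive indices always belong to neighbouring cells, which is why sorting points by such an index tends to keep spatially close points close in storage.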

Usage:

bbox = [0,0,100,100]

gix = np.random.choice(np.arange(100*100,dtype=np.int32),size=1000,replace=False)

gix.sort()

gix = np.stack(np.unravel_index(gix,shape=(100,100)),axis=-1).astype(np.int32)

gix_zarr = zarr.open('pc/gix.zarr',mode='w',shape=gix.shape, chunks=(100,1),dtype=gix.dtype)

gix_zarr[:] = gix

pc_hix('pc/gix.zarr', 'pc/hix.zarr',shape=(100,100))

2025-09-18 12:28:34 - log_args - INFO - running function: pc_hix

2025-09-18 12:28:34 - log_args - INFO - fetching args:

2025-09-18 12:28:34 - log_args - INFO - gix = 'pc/gix.zarr'

2025-09-18 12:28:34 - log_args - INFO - hix = 'pc/hix.zarr'

2025-09-18 12:28:34 - log_args - INFO - shape = (100, 100)

2025-09-18 12:28:34 - log_args - INFO - fetching args done.

2025-09-18 12:28:34 - zarr_info - INFO - pc/gix.zarr zarray shape, chunks, dtype: (1000, 2), (100, 1), int32

2025-09-18 12:28:34 - zarr_info - INFO - pc/hix.zarr zarray shape, chunks, dtype: (1000,), (100,), int64

2025-09-18 12:28:34 - pc_hix - INFO - calculating the hillbert index based on grid index

2025-09-18 12:28:36 - pc_hix - INFO - writing the hillbert index

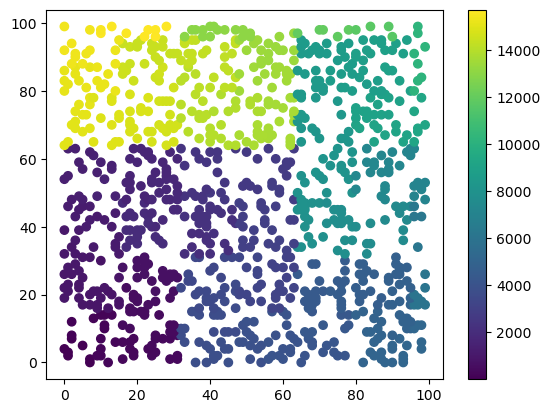

2025-09-18 12:28:36 - pc_hix - INFO - done.hix = zarr.open('pc/hix.zarr',mode='r')[:]

plt.scatter(gix[:,1], gix[:,0], c=hix)

plt.colorbar()

plt.show()pc_gix

pc_gix (hix:str, gix:str, shape:tuple)

Compute the grid index from the Hilbert index for point cloud data.

| | Type | Details |
|---|---|---|
| hix | str | Hilbert index |
| gix | str | output, path |
| shape | tuple | (nlines, width) |
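
The inverse direction can be sketched in the same spirit. The snippet below is the textbook conversion from a Hilbert distance back to a grid position on a square grid of side 2**order. The helper name `hilbert_to_xy` and the `order` parameter are hypothetical, and nothing here is claimed to match how pc_gix is implemented or how it handles non-square shapes.

```python
def hilbert_to_xy(d: int, order: int) -> tuple[int, int]:
    """Grid position of the d-th cell on a Hilbert curve of the given order.

    Hypothetical helper for illustration; not part of moraine.
    """
    n = 1 << order
    x = y = 0
    t = d
    s = 1
    while s < n:
        rx = 1 & (t // 2)
        ry = 1 & (t ^ rx)
        # Undo the quadrant rotation applied at this level.
        if ry == 0:
            if rx == 1:
                x = s - 1 - x
                y = s - 1 - y
            x, y = y, x
        x += s * rx
        y += s * ry
        t //= 4
        s *= 2
    return x, y


# Walk the whole curve on an 8x8 grid (order 3).
order = 3
path = [hilbert_to_xy(d, order) for d in range(4 ** order)]
```

Each step along `path` moves to a directly adjacent cell, and every cell is visited exactly once.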

bbox = [0,0,100,100]

gix = np.random.choice(np.arange(100*100,dtype=np.int32),size=1000,replace=False)

gix.sort()

gix = np.stack(np.unravel_index(gix,shape=(100,100)),axis=-1).astype(np.int32)

gix_zarr = zarr.open('pc/gix.zarr',mode='w',shape=gix.shape, chunks=(100,1),dtype=gix.dtype)

gix_zarr[:] = gix

pc_hix('pc/gix.zarr', 'pc/hix.zarr',shape=(100,100))

pc_gix('pc/hix.zarr','pc/gix_.zarr', (100,100))

np.testing.assert_array_equal(zarr.open('pc/gix_.zarr',mode='r')[:], gix)

2025-09-18 12:28:36 - log_args - INFO - running function: pc_hix

2025-09-18 12:28:36 - log_args - INFO - fetching args:

2025-09-18 12:28:36 - log_args - INFO - gix = 'pc/gix.zarr'

2025-09-18 12:28:36 - log_args - INFO - hix = 'pc/hix.zarr'

2025-09-18 12:28:36 - log_args - INFO - shape = (100, 100)

2025-09-18 12:28:36 - log_args - INFO - fetching args done.

2025-09-18 12:28:36 - zarr_info - INFO - pc/gix.zarr zarray shape, chunks, dtype: (1000, 2), (100, 1), int32

2025-09-18 12:28:36 - zarr_info - INFO - pc/hix.zarr zarray shape, chunks, dtype: (1000,), (100,), int64

2025-09-18 12:28:36 - pc_hix - INFO - calculating the hillbert index based on grid index

2025-09-18 12:28:36 - pc_hix - INFO - writing the hillbert index

2025-09-18 12:28:36 - pc_hix - INFO - done.

2025-09-18 12:28:36 - log_args - INFO - running function: pc_gix

2025-09-18 12:28:36 - log_args - INFO - fetching args:

2025-09-18 12:28:36 - log_args - INFO - hix = 'pc/hix.zarr'

2025-09-18 12:28:36 - log_args - INFO - gix = 'pc/gix_.zarr'

2025-09-18 12:28:36 - log_args - INFO - shape = (100, 100)

2025-09-18 12:28:36 - log_args - INFO - fetching args done.

2025-09-18 12:28:36 - zarr_info - INFO - pc/hix.zarr zarray shape, chunks, dtype: (1000,), (100,), int64

2025-09-18 12:28:36 - zarr_info - INFO - pc/gix_.zarr zarray shape, chunks, dtype: (1000, 2), (100, 1), int32

2025-09-18 12:28:36 - pc_gix - INFO - calculating the grid index from hillbert index

2025-09-18 12:28:37 - pc_gix - INFO - writing the grid index

2025-09-18 12:28:37 - pc_gix - INFO - done.

pc_sort

pc_sort (idx_in:str, idx:str, pc_in:str|list=None, pc:str|list=None, shape:tuple=None, chunks:int=None, key:str=None, processes=False, n_workers=1, threads_per_worker=1, **dask_cluster_arg)

Sort point cloud data according to the indices that sort idx_in.

| | Type | Default | Details |
|---|---|---|---|
| idx_in | str | | the unsorted grid index or Hilbert index of the input data |
| idx | str | | output, the sorted grid index or Hilbert index |
| pc_in | str \| list | None | path (in string) or list of paths for the input point cloud data |
| pc | str \| list | None | output, path (in string) or list of paths for the output point cloud data |
| shape | tuple | None | (nlines, width), faster if provided for grid index input |
| chunks | int | None | chunk size in output data, same as idx_in by default |
| key | str | None | output, path (in string) for the key of sorting |
| processes | bool | False | use processes rather than threads for dask workers |
| n_workers | int | 1 | number of dask workers |
| threads_per_worker | int | 1 | number of threads per dask worker |
| dask_cluster_arg | VAR_KEYWORD | | |
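
The note that sorting is faster when shape is provided can be pictured with plain NumPy. The sketch below assumes (this is not confirmed by the source) that a known image shape lets each (row, col) pair be flattened into one integer key, so a single-key argsort can replace a two-key lexsort. All variable names are illustrative.

```python
import numpy as np

rng = np.random.default_rng(0)
shape = (100, 100)

# Unsorted, duplicate-free grid index and matching point cloud data.
flat = rng.choice(shape[0] * shape[1], size=1000, replace=False)
gix_in = np.stack(np.unravel_index(flat, shape), axis=-1).astype(np.int32)
pc_in = rng.random(1000).astype(np.float32)

# Assumption: with the image shape known, (row, col) maps to a unique 1D key.
key_flat = np.ravel_multi_index((gix_in[:, 0], gix_in[:, 1]), shape)
order_flat = np.argsort(key_flat, kind="stable")

# Without the shape, a two-key lexicographic sort gives the same ordering.
order_lex = np.lexsort((gix_in[:, 1], gix_in[:, 0]))

# The same permutation sorts both the index and the data.
gix_sorted = gix_in[order_flat]
pc_sorted = pc_in[order_flat]
```

Because the index has no duplicates, both orderings agree, so the choice between them only affects speed.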

pc_in = np.random.rand(1000).astype(np.float32)

gix_in = np.random.choice(np.arange(100*100,dtype=np.int32),size=1000,replace=False)

gix_in = np.stack(np.unravel_index(gix_in,shape=(100,100)),axis=-1).astype(np.int32)

ind = np.lexsort((gix_in[:,1],gix_in[:,0]))

pc = pc_in[ind]; gix = gix_in[ind]

pc_in_zarr = zarr.open('pc/pc_in.zarr',mode='w',shape=pc_in.shape,dtype=pc_in.dtype,chunks=(100,))

gix_in_zarr = zarr.open('pc/gix_in.zarr',mode='w',shape=gix_in.shape,dtype=gix_in.dtype,chunks=(100,1))

pc_in_zarr[:] = pc_in; gix_in_zarr[:] = gix_in

pc_sort('pc/gix_in.zarr','pc/gix.zarr','pc/pc_in.zarr','pc/pc.zarr',shape=(100,100))

pc_zarr = zarr.open('pc/pc.zarr',mode='r'); gix_zarr = zarr.open('pc/gix.zarr',mode='r')

np.testing.assert_array_equal(pc_zarr[:],pc)

np.testing.assert_array_equal(gix_zarr[:],gix)

2025-09-18 12:28:55 - log_args - INFO - running function: pc_sort

2025-09-18 12:28:55 - log_args - INFO - fetching args:

2025-09-18 12:28:55 - log_args - INFO - idx_in = 'pc/gix_in.zarr'

2025-09-18 12:28:55 - log_args - INFO - idx = 'pc/gix.zarr'

2025-09-18 12:28:55 - log_args - INFO - pc_in = 'pc/pc_in.zarr'

2025-09-18 12:28:55 - log_args - INFO - pc = 'pc/pc.zarr'

2025-09-18 12:28:55 - log_args - INFO - shape = (100, 100)

2025-09-18 12:28:55 - log_args - INFO - chunks = None

2025-09-18 12:28:55 - log_args - INFO - key = None

2025-09-18 12:28:55 - log_args - INFO - processes = False

2025-09-18 12:28:55 - log_args - INFO - n_workers = 1

2025-09-18 12:28:55 - log_args - INFO - threads_per_worker = 1

2025-09-18 12:28:55 - log_args - INFO - dask_cluster_arg = {}

2025-09-18 12:28:55 - log_args - INFO - fetching args done.

2025-09-18 12:28:55 - zarr_info - INFO - pc/gix_in.zarr zarray shape, chunks, dtype: (1000, 2), (100, 1), int32

2025-09-18 12:28:55 - pc_sort - INFO - loading idx_in and calculate the sorting indices.

2025-09-18 12:28:55 - pc_sort - INFO - output pc chunk size is 100

2025-09-18 12:28:55 - pc_sort - INFO - write idx

2025-09-18 12:28:55 - zarr_info - INFO - idx zarray shape, chunks, dtype: (1000, 2), (100, 1), int32

2025-09-18 12:28:55 - pc_sort - INFO - starting dask local cluster.

2025-09-18 12:28:55 - pc_sort - INFO - dask local cluster started.

2025-09-18 12:28:55 - dask_cluster_info - INFO - dask cluster: LocalCluster(dashboard_link='http://10.211.48.4:8787/status', workers=1, threads=1, memory=256.00 GiB)

2025-09-18 12:28:55 - zarr_info - INFO - pc/pc_in.zarr zarray shape, chunks, dtype: (1000,), (100,), float32

2025-09-18 12:28:55 - darr_info - INFO - pc_in dask array shape, chunksize, dtype: (1000,), (1000,), float32

2025-09-18 12:28:55 - pc_sort - INFO - set up sorted pc data dask array.

2025-09-18 12:28:55 - darr_info - INFO - pc dask array shape, chunksize, dtype: (1000,), (1000,), float32

2025-09-18 12:28:55 - pc_sort - INFO - write pc to pc/pc.zarr

2025-09-18 12:28:55 - zarr_info - INFO - pc/pc.zarr zarray shape, chunks, dtype: (1000,), (100,), float32

2025-09-18 12:28:55 - pc_sort - INFO - computing graph setted. doing all the computing.

2025-09-18 12:28:55 - pc_sort - INFO - computing finished.

2025-09-18 12:28:55 - pc_sort - INFO - dask cluster closed.

pc_in = np.random.rand(1000).astype(np.float32)

hix_in = np.random.choice(np.arange(100*100,dtype=np.int64),size=1000,replace=False)

ind = np.argsort(hix_in,kind='stable')

pc = pc_in[ind]; hix = hix_in[ind]

pc_in_zarr = zarr.open('pc/pc_in.zarr',mode='w',shape=pc_in.shape,dtype=pc_in.dtype,chunks=(100,))

hix_in_zarr = zarr.open('pc/hix_in.zarr',mode='w',shape=hix_in.shape,dtype=hix_in.dtype,chunks=(100,))

pc_in_zarr[:] = pc_in; hix_in_zarr[:] = hix_in

pc_sort('pc/hix_in.zarr','pc/hix.zarr','pc/pc_in.zarr','pc/pc.zarr')

pc_zarr = zarr.open('pc/pc.zarr',mode='r'); hix_zarr = zarr.open('pc/hix.zarr',mode='r')

np.testing.assert_array_equal(pc_zarr[:],pc)

np.testing.assert_array_equal(hix_zarr[:],hix)

2025-09-18 12:28:56 - log_args - INFO - running function: pc_sort

2025-09-18 12:28:56 - log_args - INFO - fetching args:

2025-09-18 12:28:56 - log_args - INFO - idx_in = 'pc/hix_in.zarr'

2025-09-18 12:28:56 - log_args - INFO - idx = 'pc/hix.zarr'

2025-09-18 12:28:56 - log_args - INFO - pc_in = 'pc/pc_in.zarr'

2025-09-18 12:28:56 - log_args - INFO - pc = 'pc/pc.zarr'

2025-09-18 12:28:56 - log_args - INFO - shape = None

2025-09-18 12:28:56 - log_args - INFO - chunks = None

2025-09-18 12:28:56 - log_args - INFO - key = None

2025-09-18 12:28:56 - log_args - INFO - processes = False

2025-09-18 12:28:56 - log_args - INFO - n_workers = 1

2025-09-18 12:28:56 - log_args - INFO - threads_per_worker = 1

2025-09-18 12:28:56 - log_args - INFO - dask_cluster_arg = {}

2025-09-18 12:28:56 - log_args - INFO - fetching args done.

2025-09-18 12:28:56 - zarr_info - INFO - pc/hix_in.zarr zarray shape, chunks, dtype: (1000,), (100,), int64

2025-09-18 12:28:56 - pc_sort - INFO - loading idx_in and calculate the sorting indices.

2025-09-18 12:28:56 - pc_sort - INFO - output pc chunk size is 100

2025-09-18 12:28:56 - pc_sort - INFO - write idx

2025-09-18 12:28:56 - zarr_info - INFO - idx zarray shape, chunks, dtype: (1000,), (100,), int64

2025-09-18 12:28:56 - pc_sort - INFO - starting dask local cluster.

2025-09-18 12:28:56 - pc_sort - INFO - dask local cluster started.

2025-09-18 12:28:56 - dask_cluster_info - INFO - dask cluster: LocalCluster(dashboard_link='http://10.211.48.4:8787/status', workers=1, threads=1, memory=256.00 GiB)

2025-09-18 12:28:56 - zarr_info - INFO - pc/pc_in.zarr zarray shape, chunks, dtype: (1000,), (100,), float32

2025-09-18 12:28:56 - darr_info - INFO - pc_in dask array shape, chunksize, dtype: (1000,), (1000,), float32

2025-09-18 12:28:56 - pc_sort - INFO - set up sorted pc data dask array.

2025-09-18 12:28:56 - darr_info - INFO - pc dask array shape, chunksize, dtype: (1000,), (1000,), float32

2025-09-18 12:28:56 - pc_sort - INFO - write pc to pc/pc.zarr

2025-09-18 12:28:56 - zarr_info - INFO - pc/pc.zarr zarray shape, chunks, dtype: (1000,), (100,), float32

2025-09-18 12:28:56 - pc_sort - INFO - computing graph setted. doing all the computing.

2025-09-18 12:28:56 - pc_sort - INFO - computing finished.

2025-09-18 12:28:56 - pc_sort - INFO - dask cluster closed.

pc_union

pc_union (idx1:str, idx2:str, idx:str, pc1:str|list=None, pc2:str|list=None, pc:str|list=None, shape:tuple=None, chunks:int=None, processes=False, n_workers=1, threads_per_worker=1, **dask_cluster_arg)

Get the union of two point cloud datasets. For points in the intersection, data from pc1 rather than pc2 is copied to the output pc. The chunks argument sets the output chunk size; if it is not provided, the chunk size of idx1 is used.

| | Type | Default | Details |
|---|---|---|---|
| idx1 | str | | grid index or Hilbert index of the first point cloud |
| idx2 | str | | grid index or Hilbert index of the second point cloud |
| idx | str | | output, grid index or Hilbert index of the union point cloud |
| pc1 | str \| list | None | path (in string) or list of paths for the first point cloud data |
| pc2 | str \| list | None | path (in string) or list of paths for the second point cloud data |
| pc | str \| list | None | output, path (in string) or list of paths for the union point cloud data |
| shape | tuple | None | image shape, faster if provided for grid index input |
| chunks | int | None | chunk size in output data, same as idx1 by default |
| processes | bool | False | use processes rather than threads for dask workers |
| n_workers | int | 1 | number of dask workers |
| threads_per_worker | int | 1 | number of threads per dask worker |
| dask_cluster_arg | VAR_KEYWORD | | |
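
The "first dataset wins on overlap" rule can be sketched with plain NumPy for 1D (Hilbert-style) indices. This is not the algorithm behind pc_union or mr.pc_union, which return their own index arrays. It is a simpler stand-in built on np.union1d and np.searchsorted, and the function name `union_prefer_first` is hypothetical.

```python
import numpy as np


def union_prefer_first(idx1, idx2, data1, data2):
    """Union of two sorted, duplicate-free 1D indices; data1 wins on overlap.

    Hypothetical helper for illustration; not part of moraine.
    """
    idx = np.union1d(idx1, idx2)  # sorted union without duplicates
    out = np.empty((idx.shape[0], *data1.shape[1:]), dtype=data1.dtype)
    # Write dataset 2 first, then let dataset 1 overwrite the shared points.
    out[np.searchsorted(idx, idx2)] = data2
    out[np.searchsorted(idx, idx1)] = data1
    return idx, out


idx1 = np.array([1, 3, 5], dtype=np.int64)
idx2 = np.array([3, 4], dtype=np.int64)
data1 = np.array([10.0, 30.0, 50.0], dtype=np.float32)
data2 = np.array([-3.0, -4.0], dtype=np.float32)

idx, data = union_prefer_first(idx1, idx2, data1, data2)
```

Index 3 appears in both inputs, and the output keeps the value from the first dataset there.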

Usage:

pc_data1 = np.random.rand(1000,3).astype(np.float32)+1j*np.random.rand(1000,3).astype(np.float32)

pc_data2 = np.random.rand(800,3).astype(np.float32)+1j*np.random.rand(800,3).astype(np.float32)

gix1 = np.random.choice(np.arange(100*100,dtype=np.int32),size=1000,replace=False)

gix1.sort()

gix1 = np.stack(np.unravel_index(gix1,shape=(100,100)),axis=-1).astype(np.int32)

gix2 = np.random.choice(np.arange(100*100,dtype=np.int32),size=800,replace=False)

gix2.sort()

gix2 = np.stack(np.unravel_index(gix2,shape=(100,100)),axis=-1).astype(np.int32)

gix, inv_iidx1, inv_iidx2, iidx2 = mr.pc_union(gix1,gix2)

pc_data = np.empty((gix.shape[0],*pc_data1.shape[1:]),dtype=pc_data1.dtype)

pc_data[inv_iidx1] = pc_data1

pc_data[inv_iidx2] = pc_data2[iidx2]

gix1_zarr = zarr.open('pc/gix1.zarr',mode='w',shape=gix1.shape,dtype=gix1.dtype,chunks=(200,1))

gix2_zarr = zarr.open('pc/gix2.zarr',mode='w',shape=gix2.shape,dtype=gix2.dtype,chunks=(200,1))

pc1_zarr = zarr.open('pc/pc1.zarr',mode='w',shape=pc_data1.shape,dtype=pc_data1.dtype,chunks=(200,1))

pc2_zarr = zarr.open('pc/pc2.zarr',mode='w',shape=pc_data2.shape,dtype=pc_data2.dtype,chunks=(200,1))

gix1_zarr[:] = gix1

gix2_zarr[:] = gix2

pc1_zarr[:] = pc_data1

pc2_zarr[:] = pc_data2

pc_union('pc/gix1.zarr','pc/gix2.zarr','pc/gix.zarr', shape=(100,100))

pc_union('pc/gix1.zarr','pc/gix2.zarr','pc/gix.zarr','pc/pc1.zarr','pc/pc2.zarr','pc/pc.zarr')

gix_zarr = zarr.open('pc/gix.zarr',mode='r')

pc_zarr = zarr.open('pc/pc.zarr',mode='r')

np.testing.assert_array_equal(gix_zarr[:],gix)

np.testing.assert_array_equal(pc_zarr[:],pc_data)

2025-09-18 12:28:58 - log_args - INFO - running function: pc_union

2025-09-18 12:28:58 - log_args - INFO - fetching args:

2025-09-18 12:28:58 - log_args - INFO - idx1 = 'pc/gix1.zarr'

2025-09-18 12:28:58 - log_args - INFO - idx2 = 'pc/gix2.zarr'

2025-09-18 12:28:58 - log_args - INFO - idx = 'pc/gix.zarr'

2025-09-18 12:28:58 - log_args - INFO - pc1 = None

2025-09-18 12:28:58 - log_args - INFO - pc2 = None

2025-09-18 12:28:58 - log_args - INFO - pc = None

2025-09-18 12:28:58 - log_args - INFO - shape = (100, 100)

2025-09-18 12:28:58 - log_args - INFO - chunks = None

2025-09-18 12:28:58 - log_args - INFO - processes = False

2025-09-18 12:28:58 - log_args - INFO - n_workers = 1

2025-09-18 12:28:58 - log_args - INFO - threads_per_worker = 1

2025-09-18 12:28:58 - log_args - INFO - dask_cluster_arg = {}

2025-09-18 12:28:58 - log_args - INFO - fetching args done.

2025-09-18 12:28:58 - zarr_info - INFO - pc/gix1.zarr zarray shape, chunks, dtype: (1000, 2), (200, 1), int32

2025-09-18 12:28:58 - zarr_info - INFO - pc/gix2.zarr zarray shape, chunks, dtype: (800, 2), (200, 1), int32

2025-09-18 12:28:58 - pc_union - INFO - loading idx1 and idx2 into memory.

2025-09-18 12:28:58 - pc_union - INFO - calculate the union

2025-09-18 12:28:58 - pc_union - INFO - number of points in the union: 1725

2025-09-18 12:28:58 - pc_union - INFO - write union idx

2025-09-18 12:28:59 - pc_union - INFO - write done

2025-09-18 12:28:59 - zarr_info - INFO - pc/gix.zarr zarray shape, chunks, dtype: (1725, 2), (200, 1), int32

2025-09-18 12:28:59 - pc_union - INFO - no point cloud data provided, exit.

2025-09-18 12:28:59 - log_args - INFO - running function: pc_union

2025-09-18 12:28:59 - log_args - INFO - fetching args:

2025-09-18 12:28:59 - log_args - INFO - idx1 = 'pc/gix1.zarr'

2025-09-18 12:28:59 - log_args - INFO - idx2 = 'pc/gix2.zarr'

2025-09-18 12:28:59 - log_args - INFO - idx = 'pc/gix.zarr'

2025-09-18 12:28:59 - log_args - INFO - pc1 = 'pc/pc1.zarr'

2025-09-18 12:28:59 - log_args - INFO - pc2 = 'pc/pc2.zarr'

2025-09-18 12:28:59 - log_args - INFO - pc = 'pc/pc.zarr'

2025-09-18 12:28:59 - log_args - INFO - shape = None

2025-09-18 12:28:59 - log_args - INFO - chunks = None

2025-09-18 12:28:59 - log_args - INFO - processes = False

2025-09-18 12:28:59 - log_args - INFO - n_workers = 1

2025-09-18 12:28:59 - log_args - INFO - threads_per_worker = 1

2025-09-18 12:28:59 - log_args - INFO - dask_cluster_arg = {}

2025-09-18 12:28:59 - log_args - INFO - fetching args done.

2025-09-18 12:28:59 - zarr_info - INFO - pc/gix1.zarr zarray shape, chunks, dtype: (1000, 2), (200, 1), int32

2025-09-18 12:28:59 - zarr_info - INFO - pc/gix2.zarr zarray shape, chunks, dtype: (800, 2), (200, 1), int32

2025-09-18 12:28:59 - pc_union - INFO - loading idx1 and idx2 into memory.

2025-09-18 12:28:59 - pc_union - INFO - calculate the union

2025-09-18 12:28:59 - pc_union - INFO - number of points in the union: 1725

2025-09-18 12:28:59 - pc_union - INFO - write union idx

2025-09-18 12:28:59 - pc_union - INFO - write done

2025-09-18 12:28:59 - zarr_info - INFO - pc/gix.zarr zarray shape, chunks, dtype: (1725, 2), (200, 1), int32

2025-09-18 12:28:59 - pc_union - INFO - starting dask local cluster.

2025-09-18 12:28:59 - pc_union - INFO - dask local cluster started.

2025-09-18 12:28:59 - dask_cluster_info - INFO - dask cluster: LocalCluster(dashboard_link='http://10.211.48.4:8787/status', workers=1, threads=1, memory=256.00 GiB)

2025-09-18 12:28:59 - zarr_info - INFO - pc/pc1.zarr zarray shape, chunks, dtype: (1000, 3), (200, 1), complex64

2025-09-18 12:28:59 - zarr_info - INFO - pc/pc2.zarr zarray shape, chunks, dtype: (800, 3), (200, 1), complex64

2025-09-18 12:28:59 - darr_info - INFO - pc1 dask array shape, chunksize, dtype: (1000, 3), (1000, 1), complex64

2025-09-18 12:28:59 - darr_info - INFO - pc2 dask array shape, chunksize, dtype: (800, 3), (800, 1), complex64

2025-09-18 12:28:59 - pc_union - INFO - set up union pc data dask array.

2025-09-18 12:28:59 - darr_info - INFO - pc dask array shape, chunksize, dtype: (1725, 3), (1725, 1), complex64

2025-09-18 12:28:59 - pc_union - INFO - write pc to pc/pc.zarr

2025-09-18 12:28:59 - zarr_info - INFO - pc/pc.zarr zarray shape, chunks, dtype: (1725, 3), (200, 1), complex64

2025-09-18 12:28:59 - pc_union - INFO - computing graph setted. doing all the computing.

2025-09-18 12:28:59 - pc_union - INFO - computing finished.

2025-09-18 12:28:59 - pc_union - INFO - dask cluster closed.

pc_data1 = np.random.rand(1000,3).astype(np.float32)+1j*np.random.rand(1000,3).astype(np.float32)

pc_data2 = np.random.rand(800,3).astype(np.float32)+1j*np.random.rand(800,3).astype(np.float32)

hix1 = np.random.choice(np.arange(100*100,dtype=np.int64),size=1000,replace=False)

hix1.sort()

hix2 = np.random.choice(np.arange(100*100,dtype=np.int64),size=800,replace=False)

hix2.sort()

hix, inv_iidx1, inv_iidx2, iidx2 = mr.pc_union(hix1,hix2)

pc_data = np.empty((hix.shape[0],*pc_data1.shape[1:]),dtype=pc_data1.dtype)

pc_data[inv_iidx1] = pc_data1

pc_data[inv_iidx2] = pc_data2[iidx2]

hix1_zarr = zarr.open('pc/hix1.zarr',mode='w',shape=hix1.shape,dtype=hix1.dtype,chunks=(200,))

hix2_zarr = zarr.open('pc/hix2.zarr',mode='w',shape=hix2.shape,dtype=hix2.dtype,chunks=(200,))

pc1_zarr = zarr.open('pc/pc1.zarr',mode='w',shape=pc_data1.shape,dtype=pc_data1.dtype,chunks=(200,1))

pc2_zarr = zarr.open('pc/pc2.zarr',mode='w',shape=pc_data2.shape,dtype=pc_data2.dtype,chunks=(200,1))

hix1_zarr[:] = hix1

hix2_zarr[:] = hix2

pc1_zarr[:] = pc_data1

pc2_zarr[:] = pc_data2

pc_union('pc/hix1.zarr','pc/hix2.zarr','pc/hix.zarr')

pc_union('pc/hix1.zarr','pc/hix2.zarr','pc/hix.zarr','pc/pc1.zarr','pc/pc2.zarr','pc/pc.zarr')

hix_zarr = zarr.open('pc/hix.zarr',mode='r')

pc_zarr = zarr.open('pc/pc.zarr',mode='r')

np.testing.assert_array_equal(hix_zarr[:],hix)

np.testing.assert_array_equal(pc_zarr[:],pc_data)

2025-09-18 12:29:00 - log_args - INFO - running function: pc_union

2025-09-18 12:29:00 - log_args - INFO - fetching args:

2025-09-18 12:29:00 - log_args - INFO - idx1 = 'pc/hix1.zarr'

2025-09-18 12:29:00 - log_args - INFO - idx2 = 'pc/hix2.zarr'

2025-09-18 12:29:00 - log_args - INFO - idx = 'pc/hix.zarr'

2025-09-18 12:29:00 - log_args - INFO - pc1 = None

2025-09-18 12:29:00 - log_args - INFO - pc2 = None

2025-09-18 12:29:00 - log_args - INFO - pc = None

2025-09-18 12:29:00 - log_args - INFO - shape = None

2025-09-18 12:29:00 - log_args - INFO - chunks = None

2025-09-18 12:29:00 - log_args - INFO - processes = False

2025-09-18 12:29:00 - log_args - INFO - n_workers = 1

2025-09-18 12:29:00 - log_args - INFO - threads_per_worker = 1

2025-09-18 12:29:00 - log_args - INFO - dask_cluster_arg = {}

2025-09-18 12:29:00 - log_args - INFO - fetching args done.

2025-09-18 12:29:00 - zarr_info - INFO - pc/hix1.zarr zarray shape, chunks, dtype: (1000,), (200,), int64

2025-09-18 12:29:00 - zarr_info - INFO - pc/hix2.zarr zarray shape, chunks, dtype: (800,), (200,), int64

2025-09-18 12:29:00 - pc_union - INFO - loading idx1 and idx2 into memory.

2025-09-18 12:29:00 - pc_union - INFO - calculate the union

2025-09-18 12:29:00 - pc_union - INFO - number of points in the union: 1720

2025-09-18 12:29:00 - pc_union - INFO - write union idx

2025-09-18 12:29:00 - pc_union - INFO - write done

2025-09-18 12:29:00 - zarr_info - INFO - pc/hix.zarr zarray shape, chunks, dtype: (1720,), (200,), int64

2025-09-18 12:29:00 - pc_union - INFO - no point cloud data provided, exit.

2025-09-18 12:29:00 - log_args - INFO - running function: pc_union

2025-09-18 12:29:00 - log_args - INFO - fetching args:

2025-09-18 12:29:00 - log_args - INFO - idx1 = 'pc/hix1.zarr'

2025-09-18 12:29:00 - log_args - INFO - idx2 = 'pc/hix2.zarr'

2025-09-18 12:29:00 - log_args - INFO - idx = 'pc/hix.zarr'

2025-09-18 12:29:00 - log_args - INFO - pc1 = 'pc/pc1.zarr'

2025-09-18 12:29:00 - log_args - INFO - pc2 = 'pc/pc2.zarr'

2025-09-18 12:29:00 - log_args - INFO - pc = 'pc/pc.zarr'

2025-09-18 12:29:00 - log_args - INFO - shape = None

2025-09-18 12:29:00 - log_args - INFO - chunks = None

2025-09-18 12:29:00 - log_args - INFO - processes = False

2025-09-18 12:29:00 - log_args - INFO - n_workers = 1

2025-09-18 12:29:00 - log_args - INFO - threads_per_worker = 1

2025-09-18 12:29:00 - log_args - INFO - dask_cluster_arg = {}

2025-09-18 12:29:00 - log_args - INFO - fetching args done.

2025-09-18 12:29:00 - zarr_info - INFO - pc/hix1.zarr zarray shape, chunks, dtype: (1000,), (200,), int64

2025-09-18 12:29:00 - zarr_info - INFO - pc/hix2.zarr zarray shape, chunks, dtype: (800,), (200,), int64

2025-09-18 12:29:00 - pc_union - INFO - loading idx1 and idx2 into memory.

2025-09-18 12:29:00 - pc_union - INFO - calculate the union

2025-09-18 12:29:00 - pc_union - INFO - number of points in the union: 1720

2025-09-18 12:29:00 - pc_union - INFO - write union idx

2025-09-18 12:29:00 - pc_union - INFO - write done

2025-09-18 12:29:00 - zarr_info - INFO - pc/hix.zarr zarray shape, chunks, dtype: (1720,), (200,), int64

2025-09-18 12:29:00 - pc_union - INFO - starting dask local cluster.

2025-09-18 12:29:00 - pc_union - INFO - dask local cluster started.

2025-09-18 12:29:00 - dask_cluster_info - INFO - dask cluster: LocalCluster(dashboard_link='http://10.211.48.4:8787/status', workers=1, threads=1, memory=256.00 GiB)

2025-09-18 12:29:00 - zarr_info - INFO - pc/pc1.zarr zarray shape, chunks, dtype: (1000, 3), (200, 1), complex64

2025-09-18 12:29:00 - zarr_info - INFO - pc/pc2.zarr zarray shape, chunks, dtype: (800, 3), (200, 1), complex64

2025-09-18 12:29:00 - darr_info - INFO - pc1 dask array shape, chunksize, dtype: (1000, 3), (1000, 1), complex64

2025-09-18 12:29:00 - darr_info - INFO - pc2 dask array shape, chunksize, dtype: (800, 3), (800, 1), complex64

2025-09-18 12:29:00 - pc_union - INFO - set up union pc data dask array.

2025-09-18 12:29:00 - darr_info - INFO - pc dask array shape, chunksize, dtype: (1720, 3), (1720, 1), complex64

2025-09-18 12:29:00 - pc_union - INFO - write pc to pc/pc.zarr

2025-09-18 12:29:00 - zarr_info - INFO - pc/pc.zarr zarray shape, chunks, dtype: (1720, 3), (200, 1), complex64

2025-09-18 12:29:00 - pc_union - INFO - computing graph setted. doing all the computing.

2025-09-18 12:29:00 - pc_union - INFO - computing finished.

2025-09-18 12:29:00 - pc_union - INFO - dask cluster closed.

pc_intersect

pc_intersect (idx1:str, idx2:str, idx:str, pc1:str|list=None, pc2:str|list=None, pc:str|list=None, shape:tuple=None, chunks:int=None, prefer_1=True, processes=False, n_workers=1, threads_per_worker=1, **dask_cluster_arg)

Get the intersection of two point cloud datasets. The chunks argument sets the output chunk size; if it is not provided, the chunk size of idx1 is used.

| | Type | Default | Details |
|---|---|---|---|
| idx1 | str | | grid index or Hilbert index of the first point cloud |
| idx2 | str | | grid index or Hilbert index of the second point cloud |
| idx | str | | output, grid index or Hilbert index of the intersection point cloud |
| pc1 | str \| list | None | path (in string) or list of paths for the first point cloud data |
| pc2 | str \| list | None | path (in string) or list of paths for the second point cloud data |
| pc | str \| list | None | output, path (in string) or list of paths for the intersection point cloud data |
| shape | tuple | None | image shape, faster if provided for grid index input |
| chunks | int | None | chunk size in output data, same as idx1 by default |
| prefer_1 | bool | True | if True (default), save data from pc1 at the intersection to the output pc dataset; otherwise, save data from pc2 |
| processes | bool | False | use processes rather than threads for dask workers |
| n_workers | int | 1 | number of dask workers |
| threads_per_worker | int | 1 | number of threads per dask worker |
| dask_cluster_arg | VAR_KEYWORD | | |
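
The effect of prefer_1 can be sketched with plain NumPy on 1D (Hilbert-style) indices. This is a stand-in built on np.intersect1d, not the implementation used by pc_intersect, and the function name `intersect_select` is hypothetical.

```python
import numpy as np


def intersect_select(idx1, idx2, data1, data2, prefer_1=True):
    """Intersection of two duplicate-free 1D indices plus the selected data.

    Hypothetical helper for illustration; not part of moraine.
    """
    # iidx1 / iidx2 are the positions of the shared points in idx1 / idx2.
    idx, iidx1, iidx2 = np.intersect1d(
        idx1, idx2, assume_unique=True, return_indices=True
    )
    data = data1[iidx1] if prefer_1 else data2[iidx2]
    return idx, data


idx1 = np.array([1, 3, 5, 8], dtype=np.int64)
idx2 = np.array([3, 4, 8], dtype=np.int64)
data1 = np.array([10.0, 30.0, 50.0, 80.0], dtype=np.float32)
data2 = np.array([-3.0, -4.0, -8.0], dtype=np.float32)

idx, from_1 = intersect_select(idx1, idx2, data1, data2, prefer_1=True)
_, from_2 = intersect_select(idx1, idx2, data1, data2, prefer_1=False)
```

Only the dataset that is actually selected needs to be read in this sketch; whether pc_intersect has the same requirement is for the source to confirm.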

Usage:

pc_data1 = np.random.rand(1000,3).astype(np.float32)+1j*np.random.rand(1000,3).astype(np.float32)

pc_data2 = np.random.rand(800,3).astype(np.float32)+1j*np.random.rand(800,3).astype(np.float32)

gix1 = np.random.choice(np.arange(100*100,dtype=np.int32),size=1000,replace=False)

gix1.sort()

gix1 = np.stack(np.unravel_index(gix1,shape=(100,100)),axis=-1).astype(np.int32)

gix2 = np.random.choice(np.arange(100*100,dtype=np.int32),size=800,replace=False)

gix2.sort()

gix2 = np.stack(np.unravel_index(gix2,shape=(100,100)),axis=-1).astype(np.int32)

gix, iidx1, iidx2 = mr.pc_intersect(gix1,gix2)

pc_data = np.empty((gix.shape[0],*pc_data1.shape[1:]),dtype=pc_data1.dtype)

pc_data[:] = pc_data2[iidx2]

gix1_zarr = zarr.open('pc/gix1.zarr',mode='w',shape=gix1.shape,dtype=gix1.dtype,chunks=(200,1))

gix2_zarr = zarr.open('pc/gix2.zarr',mode='w',shape=gix2.shape,dtype=gix2.dtype,chunks=(200,1))

pc1_zarr = zarr.open('pc/pc1.zarr',mode='w',shape=pc_data1.shape,dtype=pc_data1.dtype,chunks=(200,1))

pc2_zarr = zarr.open('pc/pc2.zarr',mode='w',shape=pc_data2.shape,dtype=pc_data2.dtype,chunks=(200,1))

gix1_zarr[:] = gix1

gix2_zarr[:] = gix2

pc1_zarr[:] = pc_data1

pc2_zarr[:] = pc_data2

pc_intersect('pc/gix1.zarr','pc/gix2.zarr','pc/gix.zarr', shape=(100,100))

pc_intersect('pc/gix1.zarr','pc/gix2.zarr','pc/gix.zarr',pc2='pc/pc2.zarr', pc='pc/pc.zarr',prefer_1=False)

gix_zarr = zarr.open('pc/gix.zarr',mode='r')

pc_zarr = zarr.open('pc/pc.zarr',mode='r')

np.testing.assert_array_equal(gix_zarr[:],gix)

np.testing.assert_array_equal(pc_zarr[:],pc_data)

2025-09-18 12:29:02 - log_args - INFO - running function: pc_intersect

2025-09-18 12:29:02 - log_args - INFO - fetching args:

2025-09-18 12:29:02 - log_args - INFO - idx1 = 'pc/gix1.zarr'

2025-09-18 12:29:02 - log_args - INFO - idx2 = 'pc/gix2.zarr'

2025-09-18 12:29:02 - log_args - INFO - idx = 'pc/gix.zarr'

2025-09-18 12:29:02 - log_args - INFO - pc1 = None

2025-09-18 12:29:02 - log_args - INFO - pc2 = None

2025-09-18 12:29:02 - log_args - INFO - pc = None

2025-09-18 12:29:02 - log_args - INFO - shape = (100, 100)

2025-09-18 12:29:02 - log_args - INFO - chunks = None

2025-09-18 12:29:02 - log_args - INFO - prefer_1 = True

2025-09-18 12:29:02 - log_args - INFO - processes = False

2025-09-18 12:29:02 - log_args - INFO - n_workers = 1

2025-09-18 12:29:02 - log_args - INFO - threads_per_worker = 1

2025-09-18 12:29:02 - log_args - INFO - dask_cluster_arg = {}

2025-09-18 12:29:02 - log_args - INFO - fetching args done.

2025-09-18 12:29:02 - zarr_info - INFO - pc/gix1.zarr zarray shape, chunks, dtype: (1000, 2), (200, 1), int32

2025-09-18 12:29:02 - zarr_info - INFO - pc/gix2.zarr zarray shape, chunks, dtype: (800, 2), (200, 1), int32

2025-09-18 12:29:02 - pc_intersect - INFO - loading idx1 and idx2 into memory.

2025-09-18 12:29:02 - pc_intersect - INFO - calculate the intersection

2025-09-18 12:29:02 - pc_intersect - INFO - number of points in the intersection: 84

2025-09-18 12:29:02 - pc_intersect - INFO - write intersect idx

2025-09-18 12:29:02 - pc_intersect - INFO - write done

2025-09-18 12:29:02 - zarr_info - INFO - pc/gix.zarr zarray shape, chunks, dtype: (84, 2), (200, 1), int32

2025-09-18 12:29:02 - pc_intersect - INFO - no point cloud data provided, exit.

2025-09-18 12:29:02 - log_args - INFO - running function: pc_intersect

2025-09-18 12:29:02 - log_args - INFO - fetching args:

2025-09-18 12:29:02 - log_args - INFO - idx1 = 'pc/gix1.zarr'

2025-09-18 12:29:02 - log_args - INFO - idx2 = 'pc/gix2.zarr'

2025-09-18 12:29:02 - log_args - INFO - idx = 'pc/gix.zarr'

2025-09-18 12:29:02 - log_args - INFO - pc1 = None

2025-09-18 12:29:02 - log_args - INFO - pc2 = 'pc/pc2.zarr'

2025-09-18 12:29:02 - log_args - INFO - pc = 'pc/pc.zarr'

2025-09-18 12:29:02 - log_args - INFO - shape = None

2025-09-18 12:29:02 - log_args - INFO - chunks = None

2025-09-18 12:29:02 - log_args - INFO - prefer_1 = False

2025-09-18 12:29:02 - log_args - INFO - processes = False

2025-09-18 12:29:02 - log_args - INFO - n_workers = 1

2025-09-18 12:29:02 - log_args - INFO - threads_per_worker = 1

2025-09-18 12:29:02 - log_args - INFO - dask_cluster_arg = {}

2025-09-18 12:29:02 - log_args - INFO - fetching args done.

2025-09-18 12:29:02 - zarr_info - INFO - pc/gix1.zarr zarray shape, chunks, dtype: (1000, 2), (200, 1), int32

2025-09-18 12:29:02 - zarr_info - INFO - pc/gix2.zarr zarray shape, chunks, dtype: (800, 2), (200, 1), int32

2025-09-18 12:29:02 - pc_intersect - INFO - loading idx1 and idx2 into memory.

2025-09-18 12:29:02 - pc_intersect - INFO - calculate the intersection

2025-09-18 12:29:02 - pc_intersect - INFO - number of points in the intersection: 84

2025-09-18 12:29:02 - pc_intersect - INFO - write intersect idx

2025-09-18 12:29:02 - pc_intersect - INFO - write done

2025-09-18 12:29:02 - zarr_info - INFO - pc/gix.zarr zarray shape, chunks, dtype: (84, 2), (200, 1), int32

2025-09-18 12:29:02 - pc_intersect - INFO - select pc2 as pc_input.

2025-09-18 12:29:02 - pc_intersect - INFO - starting dask local cluster.

2025-09-18 12:29:02 - pc_intersect - INFO - dask local cluster started.

2025-09-18 12:29:02 - dask_cluster_info - INFO - dask cluster: LocalCluster(dashboard_link='http://10.211.48.4:8787/status', workers=1, threads=1, memory=256.00 GiB)

2025-09-18 12:29:02 - zarr_info - INFO - pc/pc2.zarr zarray shape, chunks, dtype: (800, 3), (200, 1), complex64

2025-09-18 12:29:02 - darr_info - INFO - pc_input dask array shape, chunksize, dtype: (800, 3), (800, 1), complex64

2025-09-18 12:29:02 - pc_intersect - INFO - set up intersect pc data dask array.

2025-09-18 12:29:02 - darr_info - INFO - pc dask array shape, chunksize, dtype: (84, 3), (84, 1), complex64

2025-09-18 12:29:02 - pc_intersect - INFO - write pc to pc/pc.zarr

2025-09-18 12:29:02 - zarr_info - INFO - pc/pc.zarr zarray shape, chunks, dtype: (84, 3), (200, 1), complex64

2025-09-18 12:29:02 - pc_intersect - INFO - computing graph setted. doing all the computing.

2025-09-18 12:29:03 - pc_intersect - INFO - computing finished.0.1s

2025-09-18 12:29:02 - pc_intersect - INFO - dask cluster closed.

pc_data1 = np.random.rand(1000,3).astype(np.float32)+1j*np.random.rand(1000,3).astype(np.float32)

pc_data2 = np.random.rand(800,3).astype(np.float32)+1j*np.random.rand(800,3).astype(np.float32)

hix1 = np.random.choice(np.arange(100*100,dtype=np.int64),size=1000,replace=False)

hix1.sort()

hix2 = np.random.choice(np.arange(100*100,dtype=np.int64),size=800,replace=False)

hix2.sort()

hix, iidx1, iidx2 = mr.pc_intersect(hix1,hix2)

pc_data = np.empty((hix.shape[-1],*pc_data1.shape[1:]),dtype=pc_data1.dtype)

pc_data[:] = pc_data2[iidx2]

hix1_zarr = zarr.open('pc/hix1.zarr',mode='w',shape=hix1.shape,dtype=hix1.dtype,chunks=(200,))

hix2_zarr = zarr.open('pc/hix2.zarr',mode='w',shape=hix2.shape,dtype=hix2.dtype,chunks=(200,))

pc1_zarr = zarr.open('pc/pc1.zarr',mode='w',shape=pc_data1.shape,dtype=pc_data1.dtype,chunks=(200,1))

pc2_zarr = zarr.open('pc/pc2.zarr',mode='w',shape=pc_data2.shape,dtype=pc_data2.dtype,chunks=(200,1))

hix1_zarr[:] = hix1

hix2_zarr[:] = hix2

pc1_zarr[:] = pc_data1

pc2_zarr[:] = pc_data2

pc_intersect('pc/hix1.zarr','pc/hix2.zarr','pc/hix.zarr')

pc_intersect('pc/hix1.zarr','pc/hix2.zarr','pc/hix.zarr',pc2='pc/pc2.zarr', pc='pc/pc.zarr',prefer_1=False)

hix_zarr = zarr.open('pc/hix.zarr',mode='r')

pc_zarr = zarr.open('pc/pc.zarr',mode='r')

np.testing.assert_array_equal(hix_zarr[:],hix)

np.testing.assert_array_equal(pc_zarr[:],pc_data)

2025-09-18 12:29:04 - log_args - INFO - running function: pc_intersect

2025-09-18 12:29:04 - log_args - INFO - fetching args:

2025-09-18 12:29:04 - log_args - INFO - idx1 = 'pc/hix1.zarr'

2025-09-18 12:29:04 - log_args - INFO - idx2 = 'pc/hix2.zarr'

2025-09-18 12:29:04 - log_args - INFO - idx = 'pc/hix.zarr'

2025-09-18 12:29:04 - log_args - INFO - pc1 = None

2025-09-18 12:29:04 - log_args - INFO - pc2 = None

2025-09-18 12:29:04 - log_args - INFO - pc = None

2025-09-18 12:29:04 - log_args - INFO - shape = None

2025-09-18 12:29:04 - log_args - INFO - chunks = None

2025-09-18 12:29:04 - log_args - INFO - prefer_1 = True

2025-09-18 12:29:04 - log_args - INFO - processes = False

2025-09-18 12:29:04 - log_args - INFO - n_workers = 1

2025-09-18 12:29:04 - log_args - INFO - threads_per_worker = 1

2025-09-18 12:29:04 - log_args - INFO - dask_cluster_arg = {}

2025-09-18 12:29:04 - log_args - INFO - fetching args done.

2025-09-18 12:29:04 - zarr_info - INFO - pc/hix1.zarr zarray shape, chunks, dtype: (1000,), (200,), int64

2025-09-18 12:29:04 - zarr_info - INFO - pc/hix2.zarr zarray shape, chunks, dtype: (800,), (200,), int64

2025-09-18 12:29:04 - pc_intersect - INFO - loading idx1 and idx2 into memory.

2025-09-18 12:29:04 - pc_intersect - INFO - calculate the intersection

2025-09-18 12:29:04 - pc_intersect - INFO - number of points in the intersection: 73

2025-09-18 12:29:04 - pc_intersect - INFO - write intersect idx

2025-09-18 12:29:04 - pc_intersect - INFO - write done

2025-09-18 12:29:04 - zarr_info - INFO - pc/hix.zarr zarray shape, chunks, dtype: (73,), (200,), int64

2025-09-18 12:29:04 - pc_intersect - INFO - no point cloud data provided, exit.

2025-09-18 12:29:04 - log_args - INFO - running function: pc_intersect

2025-09-18 12:29:04 - log_args - INFO - fetching args:

2025-09-18 12:29:04 - log_args - INFO - idx1 = 'pc/hix1.zarr'

2025-09-18 12:29:04 - log_args - INFO - idx2 = 'pc/hix2.zarr'

2025-09-18 12:29:04 - log_args - INFO - idx = 'pc/hix.zarr'

2025-09-18 12:29:04 - log_args - INFO - pc1 = None

2025-09-18 12:29:04 - log_args - INFO - pc2 = 'pc/pc2.zarr'

2025-09-18 12:29:04 - log_args - INFO - pc = 'pc/pc.zarr'

2025-09-18 12:29:04 - log_args - INFO - shape = None

2025-09-18 12:29:04 - log_args - INFO - chunks = None

2025-09-18 12:29:04 - log_args - INFO - prefer_1 = False

2025-09-18 12:29:04 - log_args - INFO - processes = False

2025-09-18 12:29:04 - log_args - INFO - n_workers = 1

2025-09-18 12:29:04 - log_args - INFO - threads_per_worker = 1

2025-09-18 12:29:04 - log_args - INFO - dask_cluster_arg = {}

2025-09-18 12:29:04 - log_args - INFO - fetching args done.

2025-09-18 12:29:04 - zarr_info - INFO - pc/hix1.zarr zarray shape, chunks, dtype: (1000,), (200,), int64

2025-09-18 12:29:04 - zarr_info - INFO - pc/hix2.zarr zarray shape, chunks, dtype: (800,), (200,), int64

2025-09-18 12:29:04 - pc_intersect - INFO - loading idx1 and idx2 into memory.

2025-09-18 12:29:04 - pc_intersect - INFO - calculate the intersection

2025-09-18 12:29:04 - pc_intersect - INFO - number of points in the intersection: 73

2025-09-18 12:29:04 - pc_intersect - INFO - write intersect idx

2025-09-18 12:29:04 - pc_intersect - INFO - write done

2025-09-18 12:29:04 - zarr_info - INFO - pc/hix.zarr zarray shape, chunks, dtype: (73,), (200,), int64

2025-09-18 12:29:04 - pc_intersect - INFO - select pc2 as pc_input.

2025-09-18 12:29:04 - pc_intersect - INFO - starting dask local cluster.

2025-09-18 12:29:04 - pc_intersect - INFO - dask local cluster started.

2025-09-18 12:29:04 - dask_cluster_info - INFO - dask cluster: LocalCluster(dashboard_link='http://10.211.48.4:8787/status', workers=1, threads=1, memory=256.00 GiB)

2025-09-18 12:29:04 - zarr_info - INFO - pc/pc2.zarr zarray shape, chunks, dtype: (800, 3), (200, 1), complex64

2025-09-18 12:29:04 - darr_info - INFO - pc_input dask array shape, chunksize, dtype: (800, 3), (800, 1), complex64

2025-09-18 12:29:04 - pc_intersect - INFO - set up intersect pc data dask array.

2025-09-18 12:29:04 - darr_info - INFO - pc dask array shape, chunksize, dtype: (73, 3), (73, 1), complex64

2025-09-18 12:29:04 - pc_intersect - INFO - write pc to pc/pc.zarr

2025-09-18 12:29:04 - zarr_info - INFO - pc/pc.zarr zarray shape, chunks, dtype: (73, 3), (200, 1), complex64

2025-09-18 12:29:04 - pc_intersect - INFO - computing graph setted. doing all the computing.

2025-09-18 12:29:04 - pc_intersect - INFO - computing finished.0.1s

2025-09-18 12:29:04 - pc_intersect - INFO - dask cluster closed.

pc_diff

pc_diff (idx1:str, idx2:str, idx:str, pc1:str|list=None, pc:str|list=None, shape:tuple=None, chunks:int=None, processes=False, n_workers=1, threads_per_worker=1, **dask_cluster_arg)

Get the points in idx1 that are not in idx2. pc_chunk_size and n_pc_chunk are used to determine the final pc_chunk_size. If neither of them is provided, n_pc_chunk is set to n_chunk in idx1.

| | Type | Default | Details |
|---|---|---|---|
| idx1 | str | | grid index or Hilbert index of the first point cloud |
| idx2 | str | | grid index or Hilbert index of the second point cloud |
| idx | str | | output, grid index or Hilbert index of the difference point cloud |
| pc1 | str \| list | None | path (as a string) or list of paths for the first point cloud data |
| pc | str \| list | None | output, path (as a string) or list of paths for the difference point cloud data |
| shape | tuple | None | image shape, faster if provided for grid index input |
| chunks | int | None | chunk size in output data, optional |
| processes | bool | False | use processes instead of threads for dask workers |
| n_workers | int | 1 | number of dask workers |
| threads_per_worker | int | 1 | number of threads per dask worker |
| dask_cluster_arg | VAR_KEYWORD | | |
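To make the set-difference semantics concrete, here is a minimal NumPy-only sketch of what "points in idx1 that are not in idx2" means for a grid index. It is not moraine's implementation: `grid_diff_sketch` and `random_gix` are hypothetical helpers, and the sketch assumes the image shape is known so that each (row, column) pair can be flattened to a single key. It returns the same kind of pair the usage example takes from `mr.pc_diff`, namely the remaining grid index and the positions of those points in the first point cloud.

```python
import numpy as np

def grid_diff_sketch(gix1, gix2, shape):
    """Hypothetical helper: rows of gix1 that do not occur in gix2."""
    # Flatten each (row, col) pair to one integer key so 1D set logic applies.
    key1 = np.ravel_multi_index((gix1[:, 0], gix1[:, 1]), shape)
    key2 = np.ravel_multi_index((gix2[:, 0], gix2[:, 1]), shape)
    # Positions in the first point cloud whose key is absent from the second.
    iidx1 = np.flatnonzero(~np.isin(key1, key2))
    return gix1[iidx1], iidx1

shape = (100, 100)
rng = np.random.default_rng(0)

def random_gix(n):
    """Hypothetical helper: n unique, sorted grid indices inside `shape`."""
    flat = np.sort(rng.choice(shape[0] * shape[1], size=n, replace=False))
    return np.stack(np.unravel_index(flat, shape), axis=-1).astype(np.int32)

gix1 = random_gix(1000)
gix2 = random_gix(800)
gix, iidx1 = grid_diff_sketch(gix1, gix2, shape)

# The same positions select the matching rows of any per-point data array.
pc_data1 = rng.random((1000, 3)).astype(np.float32)
pc_data = pc_data1[iidx1]
```

Because `iidx1` is increasing, the surviving points keep the order they had in the first point cloud, so a sorted input index stays sorted in the output.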

Usage:

pc_data1 = np.random.rand(1000,3).astype(np.float32)+1j*np.random.rand(1000,3).astype(np.float32)

gix1 = np.random.choice(np.arange(100*100,dtype=np.int32),size=1000,replace=False)

gix1.sort()

gix1 = np.stack(np.unravel_index(gix1,shape=(100,100)),axis=-1).astype(np.int32)

gix2 = np.random.choice(np.arange(100*100,dtype=np.int32),size=800,replace=False)

gix2.sort()

gix2 = np.stack(np.unravel_index(gix2,shape=(100,100)),axis=-1).astype(np.int32)

gix, iidx1 = mr.pc_diff(gix1,gix2)

pc_data = np.empty((gix.shape[0],*pc_data1.shape[1:]),dtype=pc_data1.dtype)

pc_data[:] = pc_data1[iidx1]

gix1_zarr = zarr.open('pc/gix1.zarr',mode='w',shape=gix1.shape,dtype=gix1.dtype,chunks=(200,1))

gix2_zarr = zarr.open('pc/gix2.zarr',mode='w',shape=gix2.shape,dtype=gix2.dtype,chunks=(200,1))

pc1_zarr = zarr.open('pc/pc1.zarr',mode='w',shape=pc_data1.shape,dtype=pc_data1.dtype,chunks=(200,1))

gix1_zarr[:] = gix1

gix2_zarr[:] = gix2

pc1_zarr[:] = pc_data1

pc_diff('pc/gix1.zarr','pc/gix2.zarr','pc/gix.zarr')

pc_diff('pc/gix1.zarr','pc/gix2.zarr','pc/gix.zarr',pc1='pc/pc1.zarr', pc='pc/pc.zarr')

gix_zarr = zarr.open('pc/gix.zarr',mode='r')

pc_zarr = zarr.open('pc/pc.zarr',mode='r')

np.testing.assert_array_equal(gix_zarr[:],gix)

np.testing.assert_array_equal(pc_zarr[:],pc_data)

2025-09-18 12:29:05 - log_args - INFO - running function: pc_diff

2025-09-18 12:29:05 - log_args - INFO - fetching args:

2025-09-18 12:29:05 - log_args - INFO - idx1 = 'pc/gix1.zarr'

2025-09-18 12:29:05 - log_args - INFO - idx2 = 'pc/gix2.zarr'

2025-09-18 12:29:05 - log_args - INFO - idx = 'pc/gix.zarr'

2025-09-18 12:29:05 - log_args - INFO - pc1 = None

2025-09-18 12:29:05 - log_args - INFO - pc = None

2025-09-18 12:29:05 - log_args - INFO - shape = None

2025-09-18 12:29:05 - log_args - INFO - chunks = None

2025-09-18 12:29:05 - log_args - INFO - processes = False

2025-09-18 12:29:05 - log_args - INFO - n_workers = 1

2025-09-18 12:29:05 - log_args - INFO - threads_per_worker = 1

2025-09-18 12:29:05 - log_args - INFO - dask_cluster_arg = {}

2025-09-18 12:29:05 - log_args - INFO - fetching args done.

2025-09-18 12:29:05 - zarr_info - INFO - pc/gix1.zarr zarray shape, chunks, dtype: (1000, 2), (200, 1), int32

2025-09-18 12:29:05 - zarr_info - INFO - pc/gix2.zarr zarray shape, chunks, dtype: (800, 2), (200, 1), int32